Screening vs diagnostic testing US Medical PG Practice Questions and MCQs

Practice US Medical PG questions for Screening vs diagnostic testing. These multiple choice questions (MCQs) cover important concepts and help you prepare for your exams.

Screening vs diagnostic testing US Medical PG Question 1: A mother presents to the family physician with her 16-year-old son. She explains, "There's something wrong with him doc. His grades are getting worse, he's cutting class, he's gaining weight, and his eyes are often bloodshot." Upon interviewing the patient apart from his mother, he seems withdrawn and angry at times when probed about his social history. The patient denies abuse and sexual history. What initial test should be sent to rule out the most likely culprit of this patient's behavior?

- A. Complete blood count

- B. Sexually transmitted infection (STI) testing

- C. Blood culture

- D. Urine toxicology screen (Correct Answer)

- E. Slit lamp examination

Screening vs diagnostic testing Explanation: ***Urine toxicology screen***

- The combination of **declining grades**, **cutting class**, **weight gain**, **bloodshot eyes**, and **irritability** is a classic presentation of **substance abuse** in an adolescent.

- A **urine toxicology screen** is the most appropriate initial test to detect common illicit substances, especially given the clear signs pointing towards drug use.

*Slit lamp examination*

- This test is used to examine the **anterior segment of the eye**, including the conjunctiva, cornea, iris, and lens.

- While the patient has **bloodshot eyes**, this specific test would be more relevant for ruling out ocular infections or injuries, not for diagnosing the underlying cause of systemic behavioral changes.

*Complete blood count*

- A **complete blood count (CBC)** measures different components of the blood, such as red blood cells, white blood cells, and platelets.

- A CBC is a general health indicator and while it can detect infections or anemia, it is not specific or sensitive enough to identify the cause of the behavioral changes described.

*Sexually transmitted infection (STI) testing*

- Although the patient denies sexual history, adolescents with certain risk factors or symptoms may still warrant STI testing as part of a broader health assessment.

- However, in this scenario, the primary cluster of symptoms (poor grades, cutting class, bloodshot eyes, irritability) points more directly to substance abuse than to an STI.

*Blood culture*

- A **blood culture** is used to detect the presence of bacteria or other microorganisms in the bloodstream, indicating a systemic infection (sepsis).

- The patient's symptoms are not indicative of an acute bacterial bloodstream infection, and a blood culture would not be the initial test for the presented behavioral changes.

Screening vs diagnostic testing US Medical PG Question 2: A home drug screening test kit is currently being developed. The cut-off level is initially set at 4 mg/uL, which is associated with a sensitivity of 92% and a specificity of 97%. How might the sensitivity and specificity of the test change if the cut-off level is changed to 2 mg/uL?

- A. Sensitivity = 92%, specificity = 97%

- B. Sensitivity = 95%, specificity = 98%

- C. Sensitivity = 100%, specificity = 97%

- D. Sensitivity = 90%, specificity = 99%

- E. Sensitivity = 97%, specificity = 96% (Correct Answer)

Screening vs diagnostic testing Explanation: ***Sensitivity = 97%, specificity = 96%***

- Lowering the cut-off from 4 mg/uL to 2 mg/uL means that more individuals will be classified as **positive** (anyone with drug levels ≥2 mg/uL instead of ≥4 mg/uL). This change will **increase the sensitivity** (capturing more true positives, fewer false negatives) but **decrease the specificity** (more false positives among those without the condition).

- Therefore, sensitivity will increase (e.g., to 97%), and specificity will decrease (e.g., to 96%), reflecting the fundamental trade-off between these metrics.
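The trade-off described above can be sketched with a small, made-up data set. The drug levels below are hypothetical values invented only for illustration (they are not from the question), and `sensitivity_specificity` is an illustrative helper name. The sketch assumes a result at or above the cut-off counts as a positive test.

```python
def sensitivity_specificity(user_levels, non_user_levels, cutoff):
    """Treat a level >= cutoff as a positive test; return (sensitivity, specificity)."""
    true_pos = sum(level >= cutoff for level in user_levels)
    true_neg = sum(level < cutoff for level in non_user_levels)
    return true_pos / len(user_levels), true_neg / len(non_user_levels)


# Hypothetical measured levels (mg/uL), invented purely for illustration.
users = [1.5, 2.5, 3.0, 3.5, 4.5, 5.0, 6.0, 7.0, 8.0, 9.0]
non_users = [0.2, 0.5, 0.8, 1.0, 1.2, 1.5, 1.8, 2.2, 3.0, 4.2]

high = sensitivity_specificity(users, non_users, cutoff=4)
low = sensitivity_specificity(users, non_users, cutoff=2)

print("cut-off 4:", high)  # fewer positives: lower sensitivity, higher specificity
print("cut-off 2:", low)   # more positives: higher sensitivity, lower specificity
```

The exact percentages depend entirely on the invented data; only the direction of change (sensitivity up, specificity down when the cut-off is lowered) mirrors the explanation.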

*Sensitivity = 92%, specificity = 97%*

- This option reflects the **original values** at the 4 mg/uL cut-off and does not account for the change in the threshold.

- A change in the cut-off level will inherently alter the test's performance characteristics.

*Sensitivity = 95%, specificity = 98%*

- This option suggests an increase in **both sensitivity and specificity**, which generally cannot be achieved simply by moving the cut-off in one direction.

- There is typically an **inverse relationship** between sensitivity and specificity when adjusting the cut-off threshold.

*Sensitivity = 100%, specificity = 97%*

- Reaching **100% sensitivity** while maintaining a high specificity is highly unlikely with a simple cut-off adjustment.

- While sensitivity would increase with a lower cut-off, achieving perfect sensitivity is unrealistic in clinical practice.

*Sensitivity = 90%, specificity = 99%*

- This option suggests a **decrease in sensitivity** and an **increase in specificity**.

- A lower cut-off would lead to more positive results, thus increasing sensitivity and reducing specificity, which contradicts the proposed values.

Screening vs diagnostic testing US Medical PG Question 3: You are developing a new diagnostic test to identify patients with disease X. Of 100 patients tested with the gold standard test, 10% tested positive. Among those who tested positive on the gold standard, the experimental test was positive in 90%. The specificity of the experimental test is 20%. What is the positive predictive value of this new test?

- A. 10%

- B. 90%

- C. 95%

- D. 11% (Correct Answer)

- E. 20%

Screening vs diagnostic testing Explanation: ***11%***

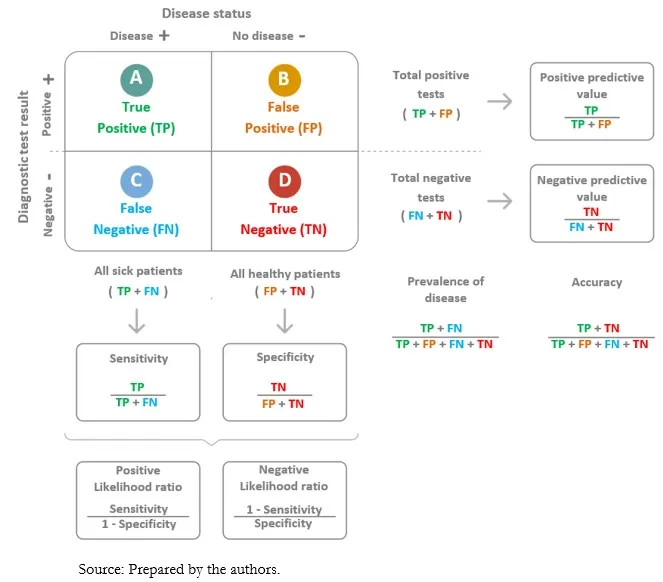

- The positive predictive value (PPV) is calculated as **true positives / (true positives + false positives)**.

- From 100 patients, 10 have disease (prevalence 10%). With 90% sensitivity, the test correctly identifies **9 true positives** (90% of 10).

- Of 90 patients without disease, specificity of 20% means 20% are correctly identified as negative (18 true negatives), so **72 false positives** = 90 × (1 - 0.20).

- Therefore, PPV = 9 / (9 + 72) = 9/81 = **11.1% ≈ 11%**.
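The arithmetic above can be reproduced in a few lines. This is a minimal sketch; the function name `positive_predictive_value` and its parameters are illustrative choices, and the inputs are the figures given in the question (100 patients, 10% prevalence, 90% sensitivity, 20% specificity).

```python
def positive_predictive_value(n_total, prevalence, sensitivity, specificity):
    """PPV = TP / (TP + FP), built from a cohort size and test characteristics."""
    diseased = n_total * prevalence
    healthy = n_total - diseased
    true_pos = diseased * sensitivity         # diseased patients who test positive
    false_pos = healthy * (1 - specificity)   # healthy patients who test positive
    return true_pos / (true_pos + false_pos)


ppv = positive_predictive_value(100, prevalence=0.10, sensitivity=0.90, specificity=0.20)
print(f"PPV = {ppv:.1%}")  # PPV = 11.1%
```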

*10%*

- This value represents the **prevalence** of the disease in the population, not the positive predictive value of the test.

- Prevalence is the proportion of individuals who have the disease (10 out of 100 patients).

*90%*

- This figure represents the **sensitivity** of the test, which is the percentage of true positives correctly identified by the experimental test.

- Sensitivity = true positives / (true positives + false negatives) = 9/10 = 90%.

*95%*

- This value is not directly derivable from the given data and does not represent any standard test characteristic in this context.

- It would imply a much higher PPV than what can be calculated given the low specificity of 20%.

*20%*

- This is the stated **specificity** of the test, which measures the proportion of true negatives correctly identified.

- Specificity = true negatives / (true negatives + false positives) = 18/90 = 20%.

Screening vs diagnostic testing US Medical PG Question 4: A scientist in Boston is studying a new blood test to detect antibodies to the parainfluenza virus with increased sensitivity and specificity. So far, her best attempt at creating such a test reached 82% sensitivity and 88% specificity. She is hoping to increase these numbers by at least 2 percent for each value. After several years of work, she believes that she has actually managed to reach a sensitivity and specificity even greater than what she had originally hoped for. She travels to South America to begin testing her newest blood test. She finds 2,000 patients who are willing to participate in her study. Of the 2,000 patients, 1,200 of them are known to be infected with the parainfluenza virus. The scientist tests these 1,200 patients’ blood and finds that only 120 of them tested negative with her new test. Of the following options, which describes the sensitivity of the test?

- A. 82%

- B. 86%

- C. 98%

- D. 90% (Correct Answer)

- E. 84%

Screening vs diagnostic testing Explanation: ***90%***

- **Sensitivity** is calculated as the number of **true positives** divided by the total number of individuals with the disease (true positives + false negatives).

- In this scenario, there were 1200 infected patients (total diseased), and 120 of them tested negative (false negatives). Therefore, 1200 - 120 = 1080 patients tested positive (true positives). The sensitivity is 1080 / 1200 = 0.90, or **90%**.
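As a quick check of the calculation above, the following sketch recomputes sensitivity from the counts in the question. The variable names are illustrative. Note that the stem gives no test results for the 800 uninfected participants, so specificity cannot be computed from it.

```python
infected = 1200         # patients known to be infected (all truly diseased)
false_negatives = 120   # infected patients who tested negative

true_positives = infected - false_negatives
sensitivity = true_positives / (true_positives + false_negatives)

print(f"true positives = {true_positives}")  # true positives = 1080
print(f"sensitivity = {sensitivity:.0%}")    # sensitivity = 90%
```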

*82%*

- This value was the **original sensitivity** of the test before the scientist improved it.

- The question states that the scientist believes she has achieved a sensitivity "even greater than what she had originally hoped for."

*86%*

- This value is not directly derivable from the given data for the new test's sensitivity.

- It might represent an intermediate calculation or an incorrect interpretation of the provided numbers.

*98%*

- This would imply only 24 false negatives out of 1200 true disease cases, which is not the case (120 false negatives).

- A sensitivity of 98% would be significantly higher than the calculated 90% and the initial stated values.

*84%*

- This value is not derived from the presented data regarding the new test's performance.

- It could be mistaken for an attempt to add 2% to the original 82% sensitivity, but the actual data from the new test should be used.

Screening vs diagnostic testing US Medical PG Question 5: You conduct a medical research study to determine the screening efficacy of a novel serum marker for colon cancer. The study is divided into 2 subsets. In the first, there are 500 patients with colon cancer, of which 450 are found positive for the novel serum marker. In the second arm, there are 500 patients who do not have colon cancer, and only 10 are found positive for the novel serum marker. What is the overall sensitivity of this novel test?

- A. 450 / (450 + 10)

- B. 490 / (10 + 490)

- C. 490 / (50 + 490)

- D. 450 / (450 + 50) (Correct Answer)

- E. 490 / (450 + 490)

Screening vs diagnostic testing Explanation: ***450 / (450 + 50)***

- **Sensitivity** is defined as the proportion of actual positive cases that are correctly identified by the test.

- In this study, there are **500 patients with colon cancer** (actual positives), and **450 of them tested positive** for the marker, while **50 tested negative** (500 - 450 = 50). Therefore, sensitivity = 450 / (450 + 50) = 450/500 = 0.9 or 90%.

*450 / (450 + 10)*

- This formula represents **Positive Predictive Value (PPV)**, which is the probability that a person with a positive test result actually has the disease.

- It incorrectly uses the total number of **test positives** in the denominator (450 true positives + 10 false positives) instead of the total number of diseased individuals, which is needed for sensitivity.

*490 / (10 + 490)*

- This is actually the correct formula for **specificity**, not sensitivity.

- Specificity = TN / (FP + TN) = 490 / (10 + 490) = 490/500 = 0.98 or 98%, which measures the proportion of actual negative cases correctly identified.

- The question asks for sensitivity, not specificity.

*490 / (50 + 490)*

- This formula is **true negatives (490)** divided by **false negatives (50) plus true negatives (490)**, which is the **negative predictive value (NPV)**, not sensitivity.

- It should not be confused with specificity, which uses false positives (10), not false negatives (50), in the denominator: 490 / (10 + 490).

*490 / (450 + 490)*

- This calculation incorrectly combines **true negatives (490)** and **true positives (450)** in the denominator, which does not correspond to any standard epidemiological measure.

- Neither sensitivity nor specificity uses both true positives and true negatives in the denominator.
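To see how the answer options map onto the standard measures, the sketch below fills a 2x2 table with the counts from the question and evaluates each formula. The variable names are illustrative. The predictive values computed here reflect the study's artificial 1:1 ratio of cancer to non-cancer patients, so they would not transfer directly to a population with a different prevalence.

```python
# 2x2 counts taken from the question stem.
tp, fn = 450, 50   # 500 patients with colon cancer
fp, tn = 10, 490   # 500 patients without colon cancer

measures = {
    "sensitivity": tp / (tp + fn),  # option D: 450 / (450 + 50)
    "specificity": tn / (tn + fp),  # option B: 490 / (10 + 490)
    "PPV": tp / (tp + fp),          # option A: 450 / (450 + 10)
    "NPV": tn / (tn + fn),          # option C: 490 / (50 + 490)
}

for name, value in measures.items():
    print(f"{name}: {value:.1%}")
```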

Screening vs diagnostic testing US Medical PG Question 6: The APPLE study investigators are currently preparing for a 30-year follow-up evaluation. They are curious about the number of participants who will partake in follow-up interviews. The investigators noted that of the 83 participants who participated in the APPLE study's 20-year follow-up, 62 were in the treatment group and 21 were in the control group. Given the unequal distribution of participants between groups at follow-up, this finding raises concerns for which of the following?

- A. Volunteer bias

- B. Reporting bias

- C. Inadequate sample size

- D. Attrition bias (Correct Answer)

- E. Lead-time bias

Screening vs diagnostic testing Explanation: ***Attrition bias***

- **Attrition bias** occurs when participants drop out of a study, especially if the dropout rate differs between the intervention and control groups, which can lead to a **skewed comparison** of outcomes.

- The unequal distribution of participants (62 vs. 21) between the treatment and control groups at the 20-year follow-up suggests that a disproportionate number of participants may have dropped out of one group, thus leading to attrition bias.

*Volunteer bias*

- **Volunteer bias** occurs when individuals who volunteer for a study differ significantly from the general population or those who decline to participate, potentially affecting the study's **generalizability**.

- This scenario describes differences in retention *after* initial participation, not differences in initial willingness to join.

*Reporting bias*

- **Reporting bias** refers to the selective reporting of study findings, where positive or statistically significant results are more likely to be published or emphasized than negative or non-significant ones, which can distort the overall evidence base.

- This bias relates to how results are disseminated, not to differential dropout rates or participant retention in a study.

*Inadequate sample size*

- **Inadequate sample size** means that the number of participants in a study is too small to detect a statistically significant effect if one truly exists, leading to a lack of **statistical power**.

- While the overall number of participants at follow-up might be small, the primary concern here is the *unequal distribution* between groups, indicating a problem with participant retention rather than just a low total count.

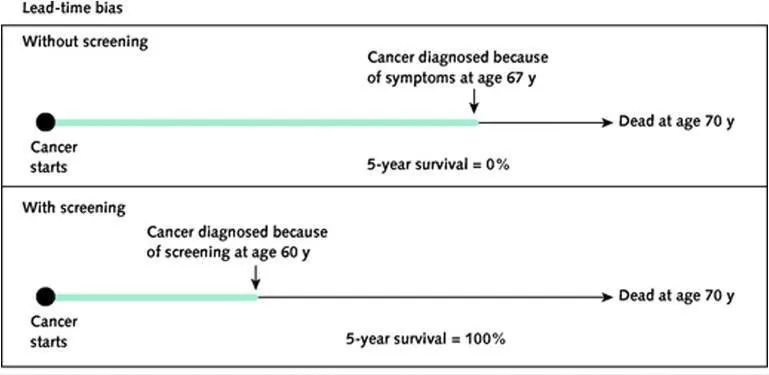

*Lead-time bias*

- **Lead-time bias** occurs when early detection of a disease (e.g., through screening) makes survival appear longer than it actually is, without necessarily prolonging the patient's life, by advancing the **point of diagnosis**.

- This bias is relevant to screening programs and disease detection, not to the differential dropout rates observed in a longitudinal study.

Screening vs diagnostic testing US Medical PG Question 7: Study X examined the relationship between coffee consumption and lung cancer. The authors of Study X retrospectively reviewed patients' reported coffee consumption and found that drinking greater than 6 cups of coffee per day was associated with an increased risk of developing lung cancer. However, Study X was criticized by the authors of Study Y. Study Y showed that increased coffee consumption was associated with smoking. What type of bias affected Study X, and what study design is geared to reduce the chance of that bias?

- A. Observer bias; double blind analysis

- B. Selection bias; randomization

- C. Lead time bias; placebo

- D. Measurement bias; blinding

- E. Confounding; randomization (Correct Answer)

Screening vs diagnostic testing Explanation: ***Confounding; randomization***

- Study Y suggests that **smoking** is a **confounding variable** because it is associated with both increased coffee consumption (exposure) and increased risk of lung cancer (outcome), distorting the apparent relationship between coffee and lung cancer.

- **Randomization** in experimental studies (such as randomized controlled trials) helps reduce confounding by ensuring that known and unknown confounding factors are evenly distributed among study groups.

- In observational studies where randomization is not possible, confounding can be addressed through **stratification**, **matching**, or **multivariable adjustment** during analysis.
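Stratification can be illustrated with a small worked example. All counts below are hypothetical, invented only to show the mechanism (Study X and Study Y report no such numbers), and `risk_ratio` is an illustrative helper. In this made-up cohort, heavy coffee drinkers are mostly smokers, and lung cancer risk depends only on smoking.

```python
def risk_ratio(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Risk in the exposed group divided by risk in the unexposed group."""
    return (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)


# Hypothetical counts per stratum:
# (heavy-coffee cases, heavy-coffee total, low-coffee cases, low-coffee total)
strata = {
    "smokers": (80, 800, 20, 200),     # 10% risk regardless of coffee intake
    "non-smokers": (2, 200, 8, 800),   # 1% risk regardless of coffee intake
}

# Crude analysis: pool the strata and ignore smoking.
crude_counts = [sum(column) for column in zip(*strata.values())]
crude_rr = risk_ratio(*crude_counts)

# Stratified analysis: compare coffee groups within each smoking stratum.
stratum_rr = {name: risk_ratio(*counts) for name, counts in strata.items()}

print(f"crude risk ratio: {crude_rr:.2f}")
for name, rr in stratum_rr.items():
    print(f"risk ratio among {name}: {rr:.2f}")
```

In this invented data set the crude risk ratio is well above 1, yet within each smoking stratum it is 1, which is the pattern expected when smoking fully explains the apparent coffee effect.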

*Observer bias; double blind analysis*

- **Observer bias** occurs when researchers' beliefs or expectations influence the study outcome, which is not the primary issue described here regarding the relationship between coffee, smoking, and lung cancer.

- **Double-blind analysis** is a method to mitigate observer bias by ensuring neither participants nor researchers know who is in the control or experimental groups.

*Selection bias; randomization*

- **Selection bias** happens when the study population is not representative of the target population, leading to inaccurate results, which is not directly indicated by the association between coffee and smoking.

- While **randomization** is used to reduce selection bias by creating comparable groups, the core problem identified in Study X is confounding, not flawed participant selection.

*Lead time bias; placebo*

- **Lead time bias** occurs in screening programs when early detection without improved outcomes makes survival appear longer, an issue unrelated to the described association between coffee, smoking, and lung cancer.

- A **placebo** is an inactive treatment used in clinical trials to control for psychological effects, and its relevance here is limited to treatment intervention studies.

*Measurement bias; blinding*

- **Measurement bias** arises from systematic errors in data collection, such as inaccurate patient reporting of coffee consumption, but the main criticism from Study Y points to a third variable (smoking) affecting the association, not just flawed measurement.

- **Blinding** helps reduce measurement bias by preventing participants or researchers from knowing group assignments, thus minimizing conscious or unconscious influences on data collection.

Screening vs diagnostic testing US Medical PG Question 8: A research study is comparing 2 novel tests for the diagnosis of Alzheimer’s disease (AD). The first is a serum blood test, and the second is a novel PET radiotracer that binds to beta-amyloid plaques. The researchers intend to have one group of patients with AD assessed via the novel blood test, and the other group assessed via the novel PET examination. In comparing these 2 trial subsets, the authors of the study may encounter which type of bias?

- A. Selection bias (Correct Answer)

- B. Confounding bias

- C. Recall bias

- D. Measurement bias

- E. Lead-time bias

Screening vs diagnostic testing Explanation: ***Selection bias***

- This occurs when different patient groups are assigned to different interventions or measurements in a way that creates **systematic differences** between comparison groups.

- In this study, having **separate patient groups** assessed with different diagnostic methods (blood test vs. PET scan) means any differences observed could be due to **differences in the patient populations** rather than differences in test performance.

- To validly compare two diagnostic tests, both tests should ideally be performed on the **same patients** (paired design) or patients should be **randomly assigned** to receive one test or the other, ensuring comparable groups.

- This is a fundamental **study design flaw** that prevents valid comparison of the two diagnostic methods.

*Measurement bias*

- Also called information bias, this occurs when there are systematic errors in how outcomes or exposures are measured.

- While using different measurement tools could introduce measurement variability, the primary issue here is that **different patient populations** are being compared, not just different measurement methods on the same population.

- Measurement bias would be more relevant if the same patients were assessed with both methods but one method was systematically misapplied or measured incorrectly.

*Confounding bias*

- This occurs when an extraneous variable is associated with both the exposure and outcome, distorting the observed relationship.

- While patient characteristics could confound results, the fundamental problem is the **study design itself** (separate groups for separate tests), which is selection bias.

*Recall bias*

- This involves systematic differences in how participants remember or report past events, common in **retrospective case-control studies**.

- Not relevant here, as this involves prospective diagnostic testing, not recollection of past exposures.

*Lead-time bias*

- Occurs in screening studies when earlier detection makes survival appear longer without changing disease outcomes.

- Not applicable to this scenario, which focuses on comparing two diagnostic methods in separate patient groups, not on survival or disease progression timing.

Screening vs diagnostic testing US Medical PG Question 9: A medical research study is beginning to evaluate the positive predictive value of a novel blood test for non-Hodgkin’s lymphoma. The diagnostic arm contains 700 patients with NHL, of which 400 tested positive for the novel blood test. In the control arm, 700 age-matched control patients are enrolled and 0 are found positive for the novel test. What is the PPV of this test?

- A. 400 / (400 + 0) (Correct Answer)

- B. 700 / (700 + 300)

- C. 400 / (400 + 300)

- D. 700 / (700 + 0)

- E. 700 / (400 + 400)

Screening vs diagnostic testing Explanation: ***400 / (400 + 0) = 1.0 or 100%***

- The **positive predictive value (PPV)** is calculated as **True Positives / (True Positives + False Positives)**.

- In this scenario, **True Positives (TP)** are the 400 patients with NHL who tested positive, and **False Positives (FP)** are 0, as no control patients tested positive.

- This gives a PPV of 400/400 = **1.0 or 100%**, indicating that all patients who tested positive actually had the disease.
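The following sketch recomputes the PPV from the counts in the question and, for contrast, the sensitivity, which is what the distractor 400 / (400 + 300) actually measures. Variable names are illustrative. The result rests on the observed zero false positives among 700 controls; a larger or different control group could still produce some.

```python
nhl_patients = 700
controls = 700

true_pos = 400                       # NHL patients with a positive test
false_neg = nhl_patients - true_pos  # NHL patients with a negative test
false_pos = 0                        # controls with a positive test
true_neg = controls - false_pos      # controls with a negative test

ppv = true_pos / (true_pos + false_pos)
sensitivity = true_pos / (true_pos + false_neg)

print(f"PPV = {ppv:.0%}")                  # PPV = 100%
print(f"sensitivity = {sensitivity:.1%}")  # sensitivity = 57.1%
```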

*700 / (700 + 300)*

- This calculation does not align with the formula for PPV based on the given data.

- The denominator `(700+300)` suggests an incorrect combination of various patient groups.

*400 / (400 + 300)*

- The denominator `(400+300)` incorrectly includes 300, which is the number of **False Negatives** (patients with NHL who tested negative), not False Positives.

- PPV focuses on the proportion of true positives among all positive tests, not all diseased individuals.

*700 / (700 + 0)*

- This calculation incorrectly uses the total number of patients with NHL (700) as the numerator, rather than the number of positive test results in that group.

- The numerator should be the **True Positives** (400), not the total number of diseased individuals.

*700 / (400 + 400)*

- This calculation uses incorrect values for both the numerator and denominator, not corresponding to the PPV formula.

- The numerator 700 represents the total number of patients with the disease, not those who tested positive, and the denominator incorrectly sums up values that don't represent the proper PPV calculation.

Screening vs diagnostic testing US Medical PG Question 10: A 57-year-old man presents to his oncologist to discuss management of small cell lung cancer. The patient is a lifelong smoker and was diagnosed with cancer 1 week ago. The patient states that the cancer was his fault for smoking and that there is "no hope now." He seems uninterested in discussing the treatment options and making a plan for treatment and follow-up. The patient says he "does not want any treatment" for his condition. Which of the following is the most appropriate response from the physician?

- A. "You seem upset at the news of this diagnosis. I want you to go home and discuss this with your loved ones and come back when you feel ready to make a plan together for your care."

- B. "It must be tough having received this diagnosis; however, new cancer therapies show increased efficacy and excellent outcomes."

- C. "It must be very challenging having received this diagnosis. I want to work with you to create a plan." (Correct Answer)

- D. "We are going to need to treat your lung cancer. I am here to help you throughout the process."

- E. "I respect your decision and we will not administer any treatment. Let me know if I can help in any way."

Screening vs diagnostic testing Explanation: ***"It must be very challenging having received this diagnosis. I want to work with you to create a plan."***

- This response **acknowledges the patient's emotional distress** and feelings of guilt and hopelessness, which is crucial for building rapport and trust.

- It also gently **re-engages the patient** by offering a collaborative approach to treatment, demonstrating the physician's commitment to supporting him through the process.

*"You seem upset at the news of this diagnosis. I want you to go home and discuss this with your loved ones and come back when you feel ready to make a plan together for your care."*

- While acknowledging distress, sending the patient home without further engagement **delays urgent care** for small cell lung cancer, which is aggressive.

- This response might be perceived as dismissive of his immediate feelings and can **exacerbate his sense of hopelessness** and isolation.

*"It must be tough having received this diagnosis; however, new cancer therapies show increased efficacy and excellent outcomes."*

- This statement moves too quickly to treatment efficacy without adequately addressing the patient's current **emotional state and fatalism**.

- While factual, it **lacks empathy** for his personal feelings of blame and hopelessness, potentially making him feel unheard.

*"We are going to need to treat your lung cancer. I am here to help you throughout the process."*

- This response is **too directive and authoritarian**, which can alienate a patient who is already feeling guilty and resistant to treatment.

- It fails to acknowledge his stated feelings of "no hope now" or his disinterest in treatment, which are critical to address before discussing the necessity of treatment.

*"I respect your decision and we will not administer any treatment. Let me know if I can help in any way."*

- While respecting patient autonomy is vital, immediately accepting a patient's decision to refuse treatment without exploring the underlying reasons (e.g., guilt, hopelessness, lack of information) is **premature and potentially harmful**.

- The physician has a responsibility to ensure the patient is making an informed decision, especially for a rapidly progressing condition like small cell lung cancer.
