Study Design

🎯 Research Design Mastery: The Clinical Investigation Blueprint

Every medical breakthrough, from life-saving drugs to practice-changing guidelines, rests on rigorous study design, yet flawed methodology can mislead entire fields for decades. You'll master the complete architecture of clinical research, learning how to construct gold-standard experiments, interpret observational studies, select the right design for each question, and identify the biases that threaten validity. By understanding why researchers choose specific designs and how each approach shapes the strength of evidence, you'll transform from passive consumer to critical architect of medical knowledge.

Study design mastery enables clinicians to critically evaluate medical literature, design robust clinical investigations, and translate research findings into patient care decisions. The systematic approach to research methodology forms the foundation for evidence-based clinical practice.

📌 Remember: FINER criteria for research questions - Feasible, Interesting, Novel, Ethical, Relevant. Every strong study begins with a question meeting all 5 criteria.

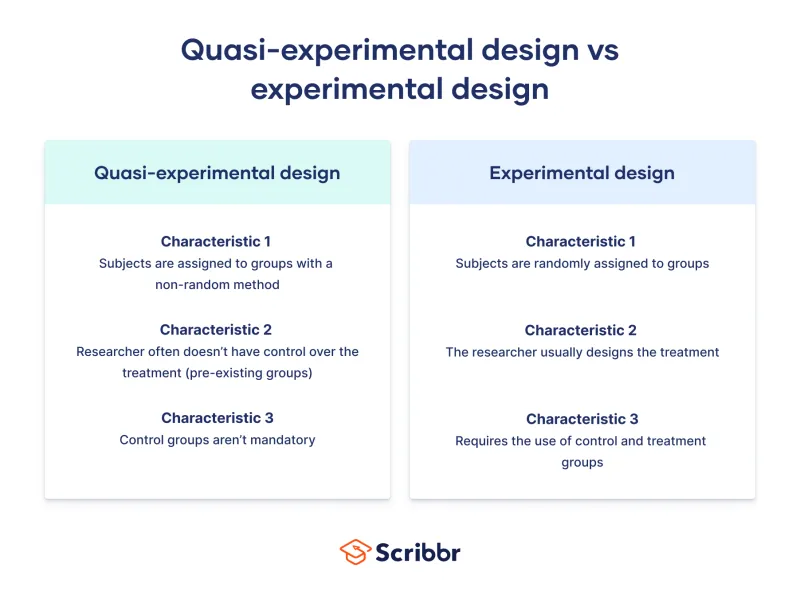

Research design selection depends on the clinical question type, available resources, ethical constraints, and desired evidence level. Experimental designs provide the strongest causal evidence but require randomization and control groups. Observational studies examine natural disease patterns but cannot establish causation directly.

- Experimental Studies

- Randomized Controlled Trials: Level 1 evidence; effect estimates are conventionally reported with 95% confidence intervals

- Quasi-experimental: Level 2 evidence without true randomization

- Non-randomized controlled trials: 80-90% reliability for causal inference

- Before-after studies: 60-75% validity depending on confounders

- Observational Studies

- Cohort studies: Level 2 evidence tracking outcomes forward from exposure

- Case-control studies: Level 3 evidence with retrospective analysis

- Odds ratios: Calculate disease-exposure associations

- Relative risk: Quantify outcome probability differences

⭐ Clinical Pearl: Randomized controlled trials minimize selection bias and balance known and unknown confounders, on average, through random allocation, which is what supports causal inference. Statistical significance is conventionally set at p<0.05.

| Study Design | Evidence Level | Causal Inference | Sample Size | Timeline | Cost Factor |
|---|---|---|---|---|---|
| Systematic Review | 1a | Strongest | 5-50 studies | 6-12 months | Low |
| RCT | 1b | Strong | 100-10,000 | 1-5 years | High |
| Cohort | 2a | Moderate | 500-50,000 | 5-20 years | Moderate |
| Case-Control | 3a | Limited | 50-5,000 | 6-24 months | Low |
| Cross-sectional | 4 | None | 100-100,000 | 1-6 months | Low |

The research question structure using PICO framework (Population, Intervention, Comparison, Outcome) determines the optimal study design. Population characteristics influence sampling strategies, intervention complexity affects randomization feasibility, comparison groups determine control requirements, and outcome measurement guides follow-up duration.

Connect these foundational principles through systematic design selection to understand how research methodology transforms clinical hypotheses into evidence-based medical knowledge.

🔬 Experimental Design Architecture: The Gold Standard Framework

Randomized Controlled Trials represent the gold standard for clinical research, providing Level 1 evidence through systematic elimination of bias sources. Simple randomization uses computer-generated sequences, block randomization ensures equal group sizes, and stratified randomization balances important covariates.

📌 Remember: CONSORT (Consolidated Standards Of Reporting Trials) guidelines for RCT reporting - the CONSORT 2010 checklist lists 25 items, including randomization method, allocation concealment, blinding procedures, and intention-to-treat analysis.

- Randomization Methods

- Simple randomization: 50% probability for each allocation

- Block randomization: Blocks of 4-8 participants ensure balance

- Fixed blocks: Predictable but balanced allocation

- Variable blocks: Unpredictable allocation with maintained balance

- Stratified randomization: Separate sequences for important subgroups

- Age stratification: <65 years vs ≥65 years

- Disease severity: Mild, moderate, severe categories
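The block randomization idea above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a validated trial tool: the function name `block_randomize`, the two arm labels, and the fixed block size of 4 are all hypothetical choices made for the example.

```python
import random


def block_randomize(n_participants, block_size=4, arms=("A", "B"), seed=None):
    """Build an allocation list from randomly permuted, balanced blocks.

    Hypothetical helper for illustration only.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)              # seeded generator, so the schedule is reproducible
    per_arm = block_size // len(arms)      # how many slots each arm gets inside one block
    allocations = []
    while len(allocations) < n_participants:
        block = list(arms) * per_arm       # e.g. ["A", "B", "A", "B"]
        rng.shuffle(block)                 # random order within the block
        allocations.extend(block)
    return allocations[:n_participants]    # the last block may be cut short


schedule = block_randomize(12, block_size=4, seed=2024)
print(schedule)
```

Every complete block of 4 contains two participants per arm, so group sizes never drift far apart. Drawing the block size at random for each block (for example 4, 6, or 8) would give the variable-block version, and running one such schedule per stratum would give stratified randomization.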

Blinding procedures prevent performance bias and detection bias by concealing treatment allocation from participants, investigators, or outcome assessors. Single-blind studies hide allocation from participants only, double-blind studies conceal it from both participants and investigators, and triple-blind studies also conceal it from data analysts.

⭐ Clinical Pearl: Allocation concealment differs from blinding - concealment prevents selection bias during enrollment, while blinding prevents performance and detection bias during follow-up. Both are essential for high-quality RCTs.

| RCT Component | Purpose | Implementation | Bias Prevention | Success Rate |
|---|---|---|---|---|
| Randomization | Equal allocation | Computer sequence | Selection bias | 95-99% |
| Allocation concealment | Hide assignment | Sealed envelopes | Selection bias | 85-95% |
| Blinding participants | Mask treatment | Placebo control | Performance bias | 70-90% |
| Blinding investigators | Mask treatment | Identical preparations | Performance bias | 80-95% |
| Blinding assessors | Mask outcomes | Independent evaluation | Detection bias | 90-98% |

💡 Master This: Internal validity measures causal inference strength within the study population, while external validity determines generalizability to broader clinical populations. Randomization maximizes internal validity, while representative sampling enhances external validity.

Sample size calculations for RCTs require effect size estimation, statistical power (typically 80-90%), significance level (usually α=0.05), and expected dropout rate (10-20% in most clinical trials). Underpowered studies risk Type II errors, while overpowered studies waste resources and may detect clinically insignificant differences.
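To make the inputs above concrete, here is a hedged sketch of one common textbook approximation for comparing two proportions with equal group sizes. The formula choice, the function names, and the example event rates (30% vs 20%) are assumptions for illustration. A real protocol would use a formula matched to its outcome type and analysis plan.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(p_control, p_treatment, alpha=0.05, power=0.80):
    """Approximate participants per arm for a two-sided test of two proportions.

    Uses the normal-approximation formula
    n = (z_(1-alpha/2) + z_power)^2 * [p1(1-p1) + p2(1-p2)] / (p1 - p2)^2.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)            # critical value for the desired power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_control - p_treatment                # absolute difference to detect
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def inflate_for_dropout(n, dropout_rate):
    """Enroll enough participants that n remain after the expected dropout."""
    return ceil(n / (1 - dropout_rate))


n = n_per_group(0.30, 0.20)                 # hypothetical event rates
print(n, inflate_for_dropout(n, 0.15))      # per-arm size before and after a 15% dropout allowance
```

Halving the detectable difference roughly quadruples the required sample, which is why effect size estimation dominates the calculation.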

Connect experimental design principles through bias control mechanisms to understand how methodological rigor transforms clinical hypotheses into reliable evidence for patient care decisions.

🔍 Observational Study Mastery: The Natural History Detectives

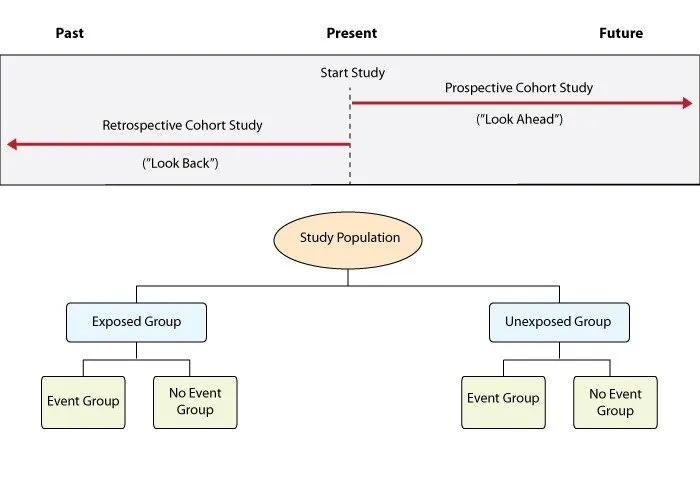

Cohort studies follow exposed and unexposed groups prospectively to measure outcome incidence. Prospective cohorts collect real-time data with minimal recall bias, while retrospective cohorts use existing records with reduced cost but potential information bias.

📌 Remember: Bradford Hill criteria for causation assessment - Strength, Consistency, Specificity, Temporal relationship, Dose-response, Plausibility, Coherence, Experimental evidence, Analogy. 9 criteria guide causal inference from observational data.

- Cohort Study Advantages

- Temporal sequence: Exposure precedes outcome by months to decades

- Multiple outcomes: Single cohort examines 5-10 different diseases

- Framingham Study: >70 years follow-up, >3,000 publications

- Nurses' Health Study: >40 years, >280,000 participants

- Incidence calculation: True disease rates in exposed populations

- Relative risk: RR = Incidence exposed / Incidence unexposed

- Attributable risk: AR = Incidence exposed - Incidence unexposed
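The two cohort formulas at the end of the list above translate directly into code. The sketch below uses invented counts (30 of 100 exposed and 10 of 100 unexposed participants developing the outcome), and the function name `risk_measures` is hypothetical.

```python
def risk_measures(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Return relative risk and attributable risk from cohort counts."""
    incidence_exposed = exposed_cases / exposed_total
    incidence_unexposed = unexposed_cases / unexposed_total
    return {
        # RR = Incidence exposed / Incidence unexposed
        "relative_risk": incidence_exposed / incidence_unexposed,
        # AR = Incidence exposed - Incidence unexposed
        "attributable_risk": incidence_exposed - incidence_unexposed,
    }


result = risk_measures(30, 100, 10, 100)   # invented example counts
print(result)
```

With these counts the exposed group has about three times the risk (RR close to 3) and about 20 extra cases per 100 people (AR close to 0.20). The ratio describes strength of association, while the difference describes absolute burden.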

Case-control studies compare exposure histories between cases (with disease) and controls (without disease), working backwards from outcome to exposure. This retrospective approach enables rapid completion and cost efficiency for rare diseases.

⭐ Clinical Pearl: Case-control studies calculate odds ratios (OR), not relative risk. When disease is rare (<10% prevalence), OR approximates RR. For common diseases, the OR lies further from 1 than the RR and so exaggerates the apparent effect.
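The rare-disease approximation in the pearl above is easy to check numerically. The sketch below assumes the standard 2×2 layout (a = exposed with disease, b = exposed without, c = unexposed with disease, d = unexposed without). Both sets of counts are invented for illustration.

```python
def relative_risk(a, b, c, d):
    """RR = [a / (a + b)] / [c / (c + d)]."""
    return (a / (a + b)) / (c / (c + d))


def odds_ratio(a, b, c, d):
    """OR = (a * d) / (b * c)."""
    return (a * d) / (b * c)


rare = (10, 990, 5, 995)      # outcome in about 1% of exposed, 0.5% of unexposed
common = (60, 40, 30, 70)     # outcome in 60% of exposed, 30% of unexposed

for label, table in (("rare", rare), ("common", common)):
    print(label, round(relative_risk(*table), 2), round(odds_ratio(*table), 2))
```

Both tables have a relative risk of about 2, but the odds ratio stays near 2 only in the rare-outcome table. In the common-outcome table it rises to about 3.5.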

| Study Design | Measure | Formula | Interpretation | Best Use |
|---|---|---|---|---|
| Cohort | Relative Risk | RR = [a/(a+b)] ÷ [c/(c+d)] | Risk ratio | Common outcomes |
| Case-Control | Odds Ratio | OR = (a×d)/(b×c) | Odds ratio | Rare diseases |
| Cross-sectional | Prevalence Ratio | PR = Prevalence exposed / Prevalence unexposed | Point prevalence | Disease burden |
| Ecological | Correlation | r = Σ(x-x̄)(y-ȳ) / √[Σ(x-x̄)² × Σ(y-ȳ)²] | Population association | Hypothesis generation |

Selection bias threatens observational studies through non-representative sampling. Berkson's bias occurs in hospital-based studies, healthy worker effect affects occupational cohorts, and loss to follow-up creates attrition bias in longitudinal studies.

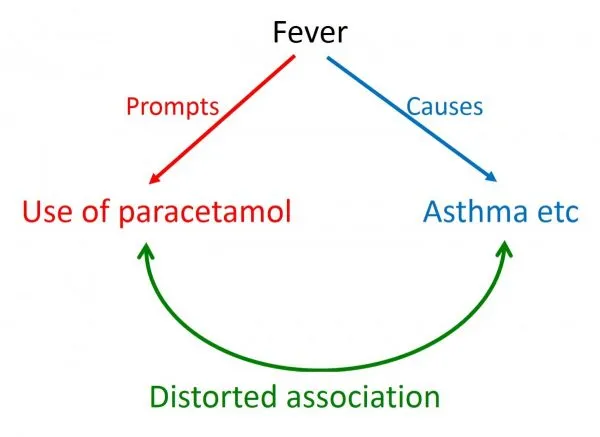

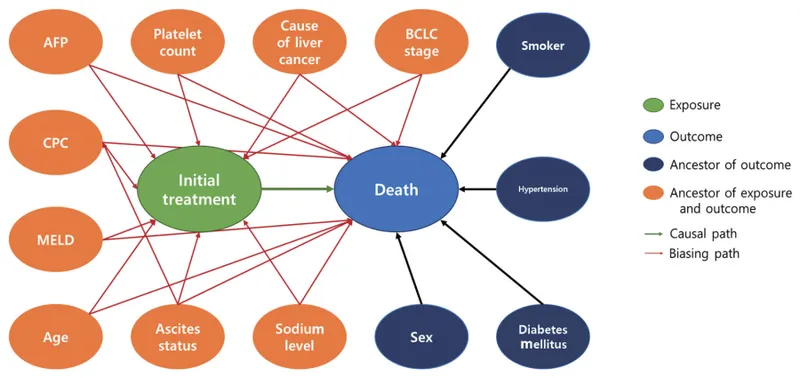

💡 Master This: Confounding variables associate with both exposure and outcome but are not intermediate steps in the causal pathway. Age, sex, socioeconomic status, and comorbidities are common confounders requiring statistical adjustment or matching.

- Bias Control Strategies

- Selection bias: Random sampling, population-based recruitment

- Information bias: Standardized instruments, blinded assessors

- Recall bias: Objective measures, medical records

- Interviewer bias: Structured protocols, training programs

- Confounding: Matching, stratification, multivariable analysis

- Propensity scores: Balance covariates between comparison groups

- Instrumental variables: Natural randomization for causal inference

Ecological studies examine population-level associations between aggregate exposures and outcomes. These studies generate hypotheses efficiently but suffer from ecological fallacy - group-level associations may not reflect individual-level relationships.

Connect observational study principles through bias recognition patterns to understand how natural disease surveillance provides complementary evidence to experimental research for comprehensive clinical knowledge.

⚖️ Study Selection Strategy: The Evidence Architecture Decision Matrix

Research question hierarchy determines design appropriateness through PICO framework analysis. Population characteristics influence sampling strategies, intervention complexity affects randomization feasibility, comparison requirements determine control group needs, and outcome rarity guides study efficiency.

📌 Remember: Study design hierarchy for evidence strength - Systematic reviews > RCTs > Cohort studies > Case-control studies > Cross-sectional studies > Case series > Expert opinion. Higher levels provide stronger causal inference.

- Question-Design Matching

- Therapy questions: RCTs provide Level 1 evidence for treatment efficacy

- Superiority trials: New treatment better than standard care

- Non-inferiority trials: New treatment not worse than standard by pre-specified margin

- Equivalence trials: Treatments clinically equivalent within narrow bounds

- Prognosis questions: Cohort studies track natural history over time

- Inception cohorts: Early disease stages for complete natural history

- Survival analysis: Time-to-event outcomes with censoring

- Diagnosis questions: Cross-sectional studies compare test performance

- Sensitivity: True positive rate = TP/(TP+FN)

- Specificity: True negative rate = TN/(TN+FP)
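The sensitivity and specificity formulas listed above can be sketched as two small functions. The counts in the example (90 true positives, 10 false negatives, 160 true negatives, 40 false positives) are invented for illustration.

```python
def sensitivity(tp, fn):
    """True positive rate = TP / (TP + FN)."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True negative rate = TN / (TN + FP)."""
    return tn / (tn + fp)


# Invented diagnostic accuracy counts against a reference standard
print(sensitivity(tp=90, fn=10), specificity(tn=160, fp=40))
```

Here the test detects 90 of 100 diseased patients and correctly clears 160 of 200 disease-free patients, so sensitivity is 0.90 and specificity is 0.80.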

Ethical constraints limit experimental designs for harmful exposures or vulnerable populations. Observational studies provide alternative approaches when randomization is unethical or unfeasible.

⭐ Clinical Pearl: Equipoise principle requires genuine uncertainty about treatment superiority before randomization is ethical. When strong evidence favors one treatment, RCTs become unethical.

| Research Question | Optimal Design | Evidence Level | Timeline | Sample Size | Key Advantage |
|---|---|---|---|---|---|
| Treatment efficacy | RCT | 1b | 1-5 years | 100-10,000 | Causal inference |
| Risk factors | Cohort | 2a | 5-20 years | 1,000-100,000 | Temporal sequence |
| Rare disease etiology | Case-control | 3a | 6-24 months | 50-1,000 | Efficiency |
| Disease prevalence | Cross-sectional | 4 | 1-6 months | 500-50,000 | Rapid assessment |
| Hypothesis generation | Ecological | 5 | 1-3 months | Population data | Low cost |

Sample size requirements vary dramatically across design types. RCTs need hundreds to thousands of participants for adequate power, cohort studies require thousands to hundreds of thousands for rare outcomes, and case-control studies achieve efficiency with smaller samples for rare diseases.

💡 Master This: Study design selection balances scientific rigor, ethical feasibility, resource constraints, and clinical relevance. The optimal design maximizes evidence quality within practical limitations.

- Design Selection Criteria

- Scientific rigor: Bias minimization, confounding control

- Ethical feasibility: Harm-benefit ratio, vulnerable populations

- Therapeutic misconception: Participants confuse research with clinical care

- Informed consent: Voluntary, informed, ongoing agreement

- Resource efficiency: Cost-effectiveness, timeline constraints

- Pilot studies: 10-20% of main study size for feasibility testing

- Adaptive designs: Interim analyses allow protocol modifications

- Clinical relevance: Patient-important outcomes, practice-changing potential

Multi-center studies enhance external validity through diverse populations and varied practice settings. International collaborations increase sample sizes and generalizability but introduce regulatory complexity and cultural variations.

Connect study selection principles through evidence optimization strategies to understand how methodological choices determine the reliability and applicability of clinical research findings.

🎯 Bias Control Mastery: The Validity Protection System

Bias represents systematic error that creates deviation from truth in study results. Unlike random error (reduced by larger sample sizes), bias requires methodological prevention through study design and analytical techniques.

Selection bias occurs when study participants differ systematically from the target population or when comparison groups differ in prognostic factors. Berkson's bias affects hospital-based studies, healthy worker effect influences occupational cohorts, and volunteer bias impacts recruitment.

📌 Remember: Bias classification - Selection bias (who gets studied), Information bias (how data is collected), Confounding (mixing of effects). Each requires different prevention strategies and analytical approaches.

- Selection Bias Types

- Berkson's bias: Hospital patients differ from general population

- Severity spectrum: Hospitalized cases represent severe disease

- Comorbidity patterns: Multiple conditions increase admission probability

- Healthy worker effect: Employed populations healthier than general population

- Mortality rates: 20-30% lower in working populations

- Disease prevalence: Reduced chronic conditions in active workers

- Loss to follow-up: Differential attrition between comparison groups

- Acceptable rates: <5% minimal bias, 5-20% moderate bias, >20% high bias

- Sensitivity analysis: Best-case/worst-case scenarios for missing data

Information bias results from systematic errors in data collection, measurement, or classification. Recall bias affects retrospective studies, interviewer bias influences data collection, and misclassification bias distorts exposure-outcome relationships.

⭐ Clinical Pearl: Differential misclassification (varies between groups) can bias results toward or away from null hypothesis. Non-differential misclassification (equal across groups) typically biases toward null.

| Bias Type | Mechanism | Prevention Strategy | Detection Method | Impact on Results |
|---|---|---|---|---|
| Recall bias | Memory differences | Objective measures | Validation studies | Away from null |
| Interviewer bias | Systematic questioning | Blinded interviewers | Inter-rater reliability | Variable direction |
| Measurement bias | Instrument error | Calibration protocols | Quality control | Variable direction |
| Misclassification | Wrong categorization | Standardized criteria | Gold standard comparison | Toward null if non-differential, variable if differential |
| Publication bias | Selective reporting | Trial registries | Funnel plots | Away from null |

Confounding control uses design-based or analysis-based strategies. Randomization distributes confounders equally between groups, matching creates comparable groups, stratification analyzes subgroups separately, and multivariable analysis adjusts for multiple confounders simultaneously.

💡 Master This: Residual confounding persists after statistical adjustment due to unmeasured confounders, measurement error, or inadequate modeling. Sensitivity analyses assess robustness of findings to unmeasured confounding.

- Confounding Control Methods

- Design phase: Randomization, matching, restriction

- Individual matching: 1:1 or 1:many case-control pairs

- Frequency matching: Group-level distribution matching

- Restriction: Limit study to homogeneous population

- Analysis phase: Stratification, multivariable modeling

- Mantel-Haenszel: Stratified analysis for categorical confounders

- Regression modeling: Continuous confounder adjustment

- Propensity scores: Balance covariates in observational studies
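The Mantel-Haenszel method named in the list above pools stratum-specific 2×2 tables into one confounder-adjusted odds ratio. The sketch below assumes the usual estimator OR_MH = Σ(a·d/n) / Σ(b·c/n), with each stratum given as (a, b, c, d) in the same layout as OR = (a×d)/(b×c). The two strata are invented numbers and the function name is hypothetical.

```python
def mantel_haenszel_or(strata):
    """Pooled odds ratio across strata, each given as an (a, b, c, d) tuple."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in strata)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in strata)
    return numerator / denominator


# Invented data: one 2x2 table per level of a categorical confounder
strata = [
    (10, 20, 5, 40),    # stratum 1, e.g. younger participants
    (30, 10, 20, 15),   # stratum 2, e.g. older participants
]
print(round(mantel_haenszel_or(strata), 2))
```

Comparing this pooled estimate with the crude odds ratio from the collapsed table gives a quick indication of whether the stratifying variable was confounding the association.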

Detection strategies identify potential bias through study design evaluation, data pattern analysis, and sensitivity testing. Funnel plots detect publication bias, dose-response relationships support causality, and consistency across studies strengthens evidence.

Bias impact assessment determines magnitude and direction of result distortion. Quantitative bias analysis estimates bias-corrected results, while sensitivity analyses test assumption variations. Multiple bias modeling addresses simultaneous bias sources.

Connect bias control principles through systematic validity protection to understand how methodological rigor ensures reliable clinical evidence for patient care decisions.

🔗 Advanced Integration: The Multi-Dimensional Research Ecosystem

Multi-dimensional research integrates quantitative and qualitative methods, individual and population-level data, and experimental and observational evidence to address complex clinical questions. This methodological pluralism provides triangulated evidence stronger than any single study design.

Mixed methods research combines quantitative rigor with qualitative depth, using sequential, concurrent, or transformative approaches. Explanatory designs use qualitative methods to explain quantitative findings, while exploratory designs use quantitative methods to test qualitative insights.

📌 Remember: Triangulation types - Data triangulation (multiple sources), Investigator triangulation (multiple researchers), Theory triangulation (multiple frameworks), Methodological triangulation (multiple methods). 4 approaches strengthen evidence validity.

- Integration Strategies

- Sequential integration: Phase 1 informs Phase 2 design

- Explanatory: RCT followed by interviews to understand mechanisms

- Exploratory: Focus groups followed by survey to test prevalence

- Concurrent integration: Simultaneous data collection and convergent analysis

- Patient-reported outcomes: Quantitative scales plus qualitative narratives

- Implementation research: Effectiveness trials plus process evaluations

- Transformative integration: Social justice framework guides methodology selection

- Community-based participatory research: Stakeholder engagement throughout research process

- Health equity focus: Marginalized populations as research partners

Systematic reviews and meta-analyses represent the highest evidence level by synthesizing multiple studies with statistical pooling. Individual participant data meta-analyses provide greater analytical power than aggregate data approaches.
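Statistical pooling can take several forms. The sketch below shows one of the simplest, a fixed-effect inverse-variance average, as an assumption for illustration. Random-effects models, which many meta-analyses use when studies are heterogeneous, are not shown. The study estimates are invented log relative risks and the function name is hypothetical.

```python
from math import sqrt


def pool_fixed_effect(estimates, variances):
    """Inverse-variance weighted mean of study estimates and its standard error."""
    weights = [1 / v for v in variances]          # more precise studies get more weight
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    standard_error = sqrt(1 / sum(weights))
    return pooled, standard_error


# Invented log relative risks and their variances from two studies
pooled, se = pool_fixed_effect([0.2, 0.4], [0.04, 0.04])
print(round(pooled, 3), round(se, 3))
```

The pooled standard error is smaller than either study's own standard error (0.2 here), which is the increase in precision that pooling provides.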

⭐ Clinical Pearl: Network meta-analysis compares multiple interventions simultaneously using direct and indirect evidence. This approach enables ranking treatments when head-to-head comparisons are limited.

| Integration Method | Purpose | Strength | Limitation | Best Application |
|---|---|---|---|---|
| Mixed methods | Comprehensive understanding | Depth + breadth | Resource intensive | Complex interventions |
| Systematic review | Evidence synthesis | Reduces bias | Publication bias | Treatment guidelines |
| Meta-analysis | Statistical pooling | Increased power | Heterogeneity | Effect size estimation |
| Network meta-analysis | Multiple comparisons | Indirect evidence | Assumption violations | Treatment ranking |
| Individual patient data | Raw data analysis | Subgroup analysis | Data availability | Personalized medicine |

Adaptive trial designs allow protocol modifications based on interim analyses while maintaining statistical validity. Sample size re-estimation, treatment arm dropping, and population enrichment improve trial efficiency and ethical conduct.

💡 Master This: Pragmatic trials maximize external validity through broad inclusion criteria, usual care comparators, and patient-important outcomes. Explanatory trials maximize internal validity through strict protocols and ideal conditions.

- Advanced Design Features

- Adaptive randomization: Response-adaptive allocation favors better-performing arms

- Bayesian updating: Continuous probability revision based on accumulating data

- Futility stopping: Early termination for lack of efficacy

- Platform trials: Multiple treatments tested in single infrastructure

- Master protocols: Shared eligibility and outcome measures

- Perpetual recruitment: New arms added as treatments become available

- Basket trials: Single treatment tested across multiple diseases

- Biomarker-driven: Molecular targets rather than anatomical sites

- Precision medicine: Personalized treatment based on genetic profiles
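The Bayesian updating mentioned under adaptive randomization above can be illustrated with the simplest conjugate case: a Beta prior on a response rate updated with binary outcomes. This is a sketch under assumptions, not how any particular trial is run. The uniform Beta(1, 1) prior, the interim counts, and the function names are all hypothetical.

```python
def update_beta(alpha, beta, responders, non_responders):
    """Posterior Beta parameters after observing binary outcomes."""
    return alpha + responders, beta + non_responders


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Start from a uniform prior, then observe 7 responders and 3 non-responders
alpha, beta = update_beta(1, 1, responders=7, non_responders=3)
print(alpha, beta, round(beta_mean(alpha, beta), 3))
```

A response-adaptive design would repeat this update for every arm at each interim look and shift allocation probabilities toward the arms whose posterior response rates look better.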

Implementation science studies how evidence-based interventions are adopted, implemented, and sustained in real-world settings. Hybrid designs test effectiveness and implementation strategies simultaneously.

Global health research addresses health disparities through culturally appropriate study designs and community engagement. Participatory research involves local stakeholders as research partners rather than study subjects.

Digital health integration uses mobile technologies, wearable devices, and artificial intelligence to enhance data collection, patient engagement, and outcome measurement. Digital biomarkers provide continuous monitoring and objective assessments.

Connect advanced integration principles through methodological innovation to understand how research evolution addresses increasingly complex clinical questions requiring sophisticated evidence generation approaches.

🎯 Clinical Research Mastery: The Evidence Generation Toolkit

Research mastery synthesizes methodological knowledge into practical frameworks for study design selection, protocol development, and evidence interpretation. This systematic approach transforms clinical questions into rigorous investigations that advance patient care.

📌 Remember: Research excellence checklist - Clear question, Rigorous design, Ethical conduct, Appropriate analysis, Transparent reporting, Evidence translation. 6 elements ensure high-quality clinical research.

Essential Research Arsenal provides quick-reference tools for immediate application in research planning and literature evaluation:

| Study Design | Primary Use | Sample Size | Timeline | Evidence Level | Key Metric |
|---|---|---|---|---|---|
| RCT | Treatment efficacy | 100-10,000 | 1-5 years | 1b | Relative risk |
| Cohort | Risk factors | 1,000-100,000 | 5-20 years | 2a | Incidence rate |
| Case-control | Rare diseases | 50-1,000 | 6-24 months | 3a | Odds ratio |
| Cross-sectional | Prevalence | 500-50,000 | 1-6 months | 4 | Prevalence ratio |
| Systematic review | Evidence synthesis | 5-50 studies | 6-12 months | 1a | Pooled estimate |

- Statistical power: 80-90% standard for adequate sample size

- Significance level: α = 0.05 conventional threshold for statistical significance

- Effect size: Cohen's d = 0.2 small, 0.5 medium, 0.8 large effect

- Loss to follow-up: <5% minimal bias, >20% threatens validity

- Inter-rater reliability: κ > 0.8 excellent agreement, 0.6-0.8 good agreement
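The κ thresholds in the last item above refer to Cohen's kappa, which corrects observed agreement for the agreement expected by chance. A minimal sketch for two raters and a yes/no rating follows. The counts are invented and the function name is hypothetical.

```python
def cohens_kappa(both_yes, yes_no, no_yes, both_no):
    """Cohen's kappa for two raters making a binary rating.

    yes_no counts cases where rater 1 said yes and rater 2 said no.
    """
    n = both_yes + yes_no + no_yes + both_no
    observed = (both_yes + both_no) / n                  # raw agreement
    rater1_yes = (both_yes + yes_no) / n
    rater2_yes = (both_yes + no_yes) / n
    # Agreement expected if the two raters were independent
    expected = rater1_yes * rater2_yes + (1 - rater1_yes) * (1 - rater2_yes)
    return (observed - expected) / (1 - expected)


print(round(cohens_kappa(both_yes=40, yes_no=10, no_yes=10, both_no=40), 2))
```

In this example the raters agree on 80% of cases, but because 50% agreement would be expected by chance, kappa comes out near 0.6, at the lower edge of the range the list calls good.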

⭐ Clinical Pearl: Number needed to treat (NNT) = 1/Absolute Risk Reduction provides clinically meaningful effect size interpretation. NNT = 5 means treating 5 patients prevents 1 adverse outcome.
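The NNT formula above can be sketched from raw event counts. The example uses exact fractions so that rounding up to a whole patient is not thrown off by floating-point error. The counts (30 of 100 control patients and 10 of 100 treated patients with the adverse outcome) are invented and the function name is hypothetical.

```python
from fractions import Fraction
from math import ceil


def number_needed_to_treat(control_events, control_total, treated_events, treated_total):
    """NNT = 1 / absolute risk reduction, rounded up to a whole patient."""
    arr = Fraction(control_events, control_total) - Fraction(treated_events, treated_total)
    if arr <= 0:
        raise ValueError("no absolute risk reduction, so NNT is not defined here")
    return ceil(1 / arr)


print(number_needed_to_treat(30, 100, 10, 100))   # ARR = 0.30 - 0.10 = 0.20
```

An absolute risk reduction of 0.20 gives an NNT of 5, matching the interpretation in the pearl. A smaller reduction of 0.05 would give an NNT of 20.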

Pattern Recognition Framework for study design selection:

- Rapid Assessment Tools

- PICO framework: Population, Intervention, Comparison, Outcome

- Study quality: CONSORT (RCTs), STROBE (observational), PRISMA (reviews)

- Bias assessment: Cochrane Risk of Bias tool for systematic evaluation

- Evidence grading: GRADE system for recommendation strength

💡 Master This: Research translation requires 3 phases - T1 (bench to bedside), T2 (bedside to practice), T3 (practice to population). Each phase needs different study designs and outcome measures.

Clinical Research Commandments for evidence-based practice:

- Match design to question - Intervention questions need experimental designs

- Control for confounding - Randomization or statistical adjustment

- Minimize bias - Blinding, standardization, objective measures

- Ensure adequate power - Sample size calculations before data collection

- Report transparently - Complete methodology and all results

- Consider generalizability - External validity for clinical application

Advanced Integration Strategies:

- Triangulation: Multiple methods strengthen evidence validity

- Replication: Independent studies confirm initial findings

- Meta-analysis: Statistical pooling increases precision

- Implementation research: Real-world effectiveness complements efficacy trials

Future Directions in clinical research include precision medicine, digital health integration, patient-centered outcomes, and global health equity. Artificial intelligence and machine learning will enhance study design, data analysis, and evidence synthesis.

Research mastery transforms clinical curiosity into systematic investigation, generating reliable evidence that improves patient outcomes and advances medical knowledge for future generations of healthcare providers and patients worldwide.

Practice Questions: Study Design

Test your understanding with these related questions

A study is funded by the tobacco industry to examine the association between smoking and lung cancer. They design a study with a prospective cohort of 1,000 smokers between the ages of 20-30. The length of the study is five years. After the study period ends, they conclude that there is no relationship between smoking and lung cancer. Which of the following study features is the most likely reason for the failure of the study to note an association between tobacco use and cancer?