Power and sample size


⚡ The Statistical Power Engine: Precision Medicine's Foundation

Every clinical trial hinges on a deceptively simple question: how many patients do you need to detect a real treatment effect? Master the mathematical architecture of power and sample size calculations, and you'll command the difference between studies that reveal truth and those that waste resources chasing noise. You'll build from core statistical engines through formula precision to advanced multi-system integration, learning to architect studies that balance feasibility with the rigor demanded by evidence-based medicine. This is where statistical theory becomes the foundation of every treatment decision you'll ever make.

📌 Remember: POWER - Probability Of Winning Every Real effect. Power represents the probability (80-90% standard) of detecting a true clinical difference when it actually exists.

The Power Quartet: Four Levers That Control Everything


Effect Size (Cohen's d)

- Small effect: d = 0.2 (blood pressure reduction 2-3 mmHg)

- Medium effect: d = 0.5 (cholesterol reduction 15-25%)

- Large effect: d = 0.8 (cholesterol reduction >30%)

- Clinical significance threshold: d ≥ 0.3 for most interventions

- Pharmaceutical trials typically target: d = 0.4-0.6


Sample Size (N)

- Pilot studies: N = 20-50 per group

- Phase II trials: N = 100-300 total

- Phase III trials: N = 1000-5000 total

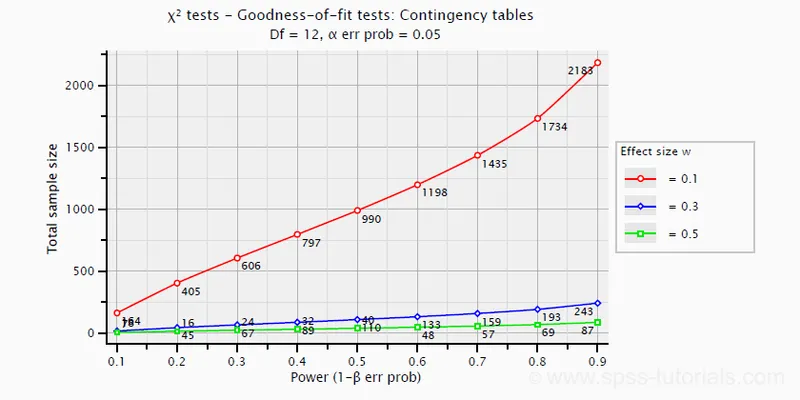

- Doubling sample size multiplies the expected test statistic by √2 (power rises, but non-linearly and never past 100%)

- Quadrupling sample size halves the minimum detectable effect size


Alpha Level (Type I Error)

- Standard medical research: α = 0.05 (5% false positive rate)

- Bonferroni correction: α/k where k = number of comparisons

- FDA convention: α = 0.025 one-sided, which corresponds to α = 0.05 two-sided


Power (1 - β)

- Minimum acceptable: 80% power

- Preferred standard: 90% power

- High-stakes trials: 95% power

| Parameter | Pilot Study | Phase II | Phase III | Meta-Analysis |
|---|---|---|---|---|
| Power Target | 70-80% | 80-85% | 90-95% | 95-99% |
| Alpha Level | 0.05-0.10 | 0.05 | 0.025-0.05 | 0.01-0.05 |
| Effect Size (Cohen's d) | 0.5-0.8 | 0.3-0.6 | 0.2-0.4 | 0.1-0.3 |
| Sample Size | 20-100 | 100-500 | 1000-10000 | 5000-50000 |
| Cost Range | $50K-200K | $1M-5M | $50M-500M | $100K-1M |

💡 Master This: The core relationship for a two-group comparison is Z_α/2 + Z_β = (Δ/σ) × √(n/2), so the required sample size per group is n ∝ (Z_α/2 + Z_β)² / d². Doubling the effect size therefore has the same impact as quadrupling the sample size, making precise outcome measurement critical for efficient trial design.
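A minimal sketch of that relationship, assuming a two-sided two-sample comparison analysed with the normal approximation (a t-test gives slightly lower power in small samples). The function name `approx_power` is illustrative, not from any library. It shows that doubling d and quadrupling n produce the same power.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def approx_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-sample z-test.

    d is the standardized effect size (Cohen's d = difference / SD).
    """
    z_crit = Z.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic under the alternative: d * sqrt(n/2)
    shift = d * (n_per_group / 2) ** 0.5
    # Probability of rejecting in either tail
    return Z.cdf(shift - z_crit) + Z.cdf(-shift - z_crit)

base = approx_power(0.5, 63)        # about 0.80
double_d = approx_power(1.0, 63)    # effect size doubled
quad_n = approx_power(0.5, 252)     # sample size quadrupled
print(f"{base:.3f} {double_d:.4f} {quad_n:.4f}")
```

The last two values match because power depends only on d·√n, so a 2x change in d is equivalent to a 4x change in n.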

Understanding these four levers provides the foundation for designing studies that reliably detect clinically meaningful differences. The next section reveals how these components interact through the mathematical precision of sample size formulas.


🧮 Sample Size Formulas: The Mathematical Precision Engine

Continuous Outcomes: The t-Test Foundation

For comparing means between two groups (blood pressure, cholesterol, pain scores):

$$n = \frac{2\sigma^2(Z_{\alpha/2} + Z_\beta)^2}{(\mu_1 - \mu_2)^2}$$

Where:

- n = sample size per group

- σ² = variance (standard deviation squared)

- Z_α/2 = critical value for the two-sided alpha level (1.96 for α = 0.05)

- Z_β = critical value for beta (0.84 for 80% power, 1.28 for 90% power)

- μ₁ - μ₂ = clinically meaningful difference
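The formula translates directly into a few lines of code. This is a sketch using the normal approximation; `n_per_group_means` is a hypothetical helper name, and the 15 mmHg standard deviation is an illustrative assumption rather than a value from the text.

```python
import math
from statistics import NormalDist

def n_per_group_means(sigma: float, delta: float,
                      alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group n for comparing two means (two-sided, normal approximation).

    n = 2 * sigma^2 * (Z_alpha/2 + Z_beta)^2 / delta^2, rounded up.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)            # 0.84 for 80% power
    n = 2 * sigma**2 * (z_alpha + z_beta) ** 2 / delta**2
    return math.ceil(n)

# Hypothetical: SD of systolic BP = 15 mmHg, target difference = 5 mmHg
print(n_per_group_means(sigma=15, delta=5))               # 142
print(n_per_group_means(sigma=15, delta=5, power=0.90))   # 190
```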

📌 Remember: SIGMA - Standard deviation Impacts Greatly Most Analyses. Because n scales with σ², halving the outcome standard deviation cuts the required sample size by 75%.

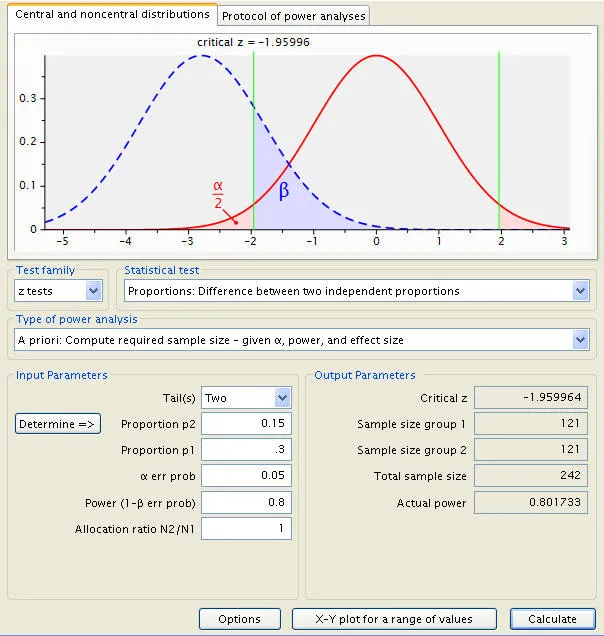

Binary Outcomes: The Proportion Power Formula

For comparing proportions (mortality, response rates, adverse events):

$$n = \frac{(Z_{\alpha/2}\sqrt{2\bar{p}(1-\bar{p})} + Z_\beta\sqrt{p_1(1-p_1) + p_2(1-p_2)})^2}{(p_1 - p_2)^2}$$

Where:

- n = sample size per group

- p₁, p₂ = proportions in each group

- p̄ = pooled proportion = (p₁ + p₂)/2
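A sketch of the proportion formula, assuming equal allocation and a two-sided test; `n_per_group_props` is a hypothetical name, and the 20% vs 10% event rates are illustrative only.

```python
import math
from statistics import NormalDist

def n_per_group_props(p1: float, p2: float,
                      alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group n for comparing two proportions (two-sided, equal allocation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    p_bar = (p1 + p2) / 2                                   # average proportion
    term_null = z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
    term_alt = z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    n = (term_null + term_alt) ** 2 / (p1 - p2) ** 2
    return math.ceil(n)

# Hypothetical: 20% event rate on control vs 10% on treatment
print(n_per_group_props(0.20, 0.10))   # 199 per group
```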

Sample Size Multipliers by Study Design


Parallel Groups (Standard)

- Two-group comparison: N = n × 2

- Three-group comparison: N = n × 3

- Unequal allocation (2:1): N = n × 2.25 total (1.5n and 0.75n), about 12.5% more than equal allocation


Crossover Design

- Sample size reduction: 50-75% vs a parallel design (total N ≈ n × (1 - ρ), where ρ = within-subject correlation)

- Washout period required: ≥5 half-lives

- Carryover effect testing: Additional 10-20% sample


Cluster Randomized Trials

- Design effect: 1 + (m-1) × ICC

- m = average cluster size

- ICC = intracluster correlation (0.01-0.05 typical)

- Sample inflation: 150-400% of individual randomization

| Study Type | Base Formula | Adjustment Factor | Final Sample Size |
|---|---|---|---|
| Two-group parallel | n per group | × 2 | 2n total |
| Crossover design | n per group | × 0.5-0.75 | 0.5-0.75n total |
| Cluster RCT | n per group | × (1 + (m-1)ICC) | 2n × design effect (3-8n total for a design effect of 1.5-4) |
| Non-inferiority | n per group | × 1.2-1.8 | 2.4-3.6n total |
| Equivalence trial | n per group | × 1.5-2.5 | 3-5n total |

💡 Master This: The design effect in cluster trials: A primary care study with 20 patients per clinic and ICC = 0.03 requires 1 + (20-1) × 0.03 = 1.57x more participants than individual randomization.
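The same arithmetic can be scripted to get participants and whole clusters per arm. This is a sketch; the helper names are hypothetical, and the starting value of 142 per arm is an assumed individually randomized sample size (for example, σ = 15 mmHg and Δ = 5 mmHg at 80% power).

```python
import math

def design_effect(m: float, icc: float) -> float:
    """Design effect for cluster randomization: 1 + (m - 1) * ICC."""
    return 1 + (m - 1) * icc

def cluster_sample_size(n_individual: int, m: int, icc: float) -> tuple[int, int]:
    """Inflate a per-arm individually randomized n; return (participants, clusters) per arm."""
    n_cluster = math.ceil(n_individual * design_effect(m, icc))
    clusters = math.ceil(n_cluster / m)   # whole clusters needed per arm
    return n_cluster, clusters

de = design_effect(20, 0.03)   # 1.57, as in the worked example
per_arm, clusters = cluster_sample_size(142, m=20, icc=0.03)
print(round(de, 2), per_arm, clusters)   # 1.57 223 12
```

Rounding up to whole clusters (12 × 20 = 240) adds a little extra margin beyond the inflated 223.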

Survival Analysis: Time-to-Event Calculations

For survival studies (time to death, disease progression, treatment failure) with 1:1 allocation, the Schoenfeld approximation gives the number of events required:

$$d = \frac{4(Z_{\alpha/2} + Z_\beta)^2}{(\ln(HR))^2}$$

Where:

- d = number of events required

- HR = hazard ratio (target effect size)

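- N ≈ d / P(event), where P(event) is the probability that an enrolled participant has an observed event by the end of follow-up (reflecting event rate, accrual, and follow-up duration)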

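⚠️ Warning: Survival trials require events, not just participants. A study targeting HR = 0.7 needs about 247 events for 80% power (about 331 for 90% power); with a 20% probability of an event during follow-up, that translates into roughly 1,235-1,655 participants before allowing for dropout, often with ≥2-year follow-up.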
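A sketch of the event-driven calculation, assuming a two-sided log-rank test, 1:1 allocation, and the Schoenfeld approximation (including its factor of 4). The helper names are hypothetical, and the 20% event probability is the illustrative value from the warning above.

```python
import math
from statistics import NormalDist

def schoenfeld_events(hr: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Events needed for a two-sided log-rank test with 1:1 allocation (Schoenfeld)."""
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    return math.ceil(4 * z_sum**2 / math.log(hr) ** 2)

def participants_needed(events: int, p_event: float) -> int:
    """Participants needed if each has probability p_event of an observed event."""
    return math.ceil(events / p_event)

d80 = schoenfeld_events(0.7)               # 247 events
d90 = schoenfeld_events(0.7, power=0.90)   # 331 events
print(d80, d90, participants_needed(d80, 0.20))
```

Power is driven by `d80` or `d90`; the participant count only follows from how likely each enrolled person is to contribute an event.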

These mathematical foundations enable precise study planning that maximizes the probability of detecting clinically meaningful effects. The next section explores how to systematically apply these formulas across different research scenarios.


🎯 Clinical Application Matrix: Pattern Recognition for Study Design

Primary Outcome Pattern Recognition Framework


Continuous Outcomes: "Measure and Compare"

- Blood pressure reduction: t-test formula

- Pain scale improvement: ANCOVA with baseline adjustment

- Laboratory values: repeated measures design

- Sample size reduction: ≈25% at baseline correlation r = 0.5 and ≈50% at r = 0.7 (ANCOVA multiplies n by 1 - r²)

- Power increase: 30-60% with proper covariate adjustment


Binary Outcomes: "Success or Failure"

- Mortality rates: chi-square/Fisher's exact

- Response rates: proportion comparison

- Adverse events: relative risk calculation

- Rare events (<5%): Requires large samples (N > 1000)

- Common events (>20%): Moderate samples adequate (N = 200-500)


Time-to-Event: "When Will It Happen"

- Survival analysis: log-rank test

- Disease progression: Cox regression

- Treatment failure: Kaplan-Meier comparison

- Event-driven design: Power depends on events, not sample size

- Censoring impact: >50% censoring requires sample inflation

📌 Remember: OUTCOME - Observational Units Timing Censoring Objective Measurement Events. Each element determines the appropriate sample size approach.

Study Design Recognition Patterns

| Clinical Scenario | Outcome Type | Design Choice | Sample Size Approach | Power Considerations |
|---|---|---|---|---|
| Hypertension drug trial | Continuous (mmHg) | Parallel groups | t-test formula | 80% power for 5 mmHg difference |
| Cancer response rate | Binary (response) | Parallel groups | Proportion test | 90% power for 20% improvement |
| Survival comparison | Time-to-event | Parallel groups | Log-rank test | Event-driven (300+ deaths) |
| Pain management | Continuous (VAS) | Crossover | Paired t-test | 50% sample reduction possible |
| Primary care intervention | Binary (control) | Cluster RCT | Design effect × 2-4 | ICC adjustment critical |

Effect Size Recognition: Clinical Significance Thresholds


Small Effects (d = 0.2): Require large samples (N ≈ 800 total at 80% power, ≈1,050 at 90%)

- Blood pressure: 2-3 mmHg reduction

- Cholesterol: 5-10% reduction

- Quality of life: 0.2-0.3 point improvement


Medium Effects (d = 0.5): Moderate samples (N ≈ 130 total at 80% power, ≈170 at 90%)

- Blood pressure: 5-8 mmHg reduction

- Cholesterol: 15-25% reduction

- Pain scores: 1-2 point improvement


Large Effects (d = 0.8): Small samples sufficient (N ≈ 50 total at 80% power, ≈70 at 90%)

- Blood pressure: >10 mmHg reduction

- Cholesterol: >30% reduction

- Pain scores: >3 point improvement

💡 Master This: The clinical significance paradox: Smaller, more realistic effect sizes require quadratically larger samples, because n scales with 1/Δ². A 2 mmHg blood pressure reduction (clinically meaningful for population health) requires 4x more participants than a 4 mmHg reduction.
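A quick check of the inverse-square relationship, using the normal approximation at two-sided α = 0.05 and 80% power. The 15 mmHg SD is a hypothetical value, and `raw_n_per_group` is an illustrative helper, not a library function.

```python
import math
from statistics import NormalDist

z = NormalDist()
Z_SUM = z.inv_cdf(0.975) + z.inv_cdf(0.80)   # two-sided alpha = 0.05, 80% power

def raw_n_per_group(delta: float, sigma: float) -> float:
    """Unrounded per-group n for a difference in means (normal approximation)."""
    return 2 * sigma**2 * Z_SUM**2 / delta**2

# Hypothetical SD of 15 mmHg: halving the target difference quadruples n
ratio = raw_n_per_group(2, 15) / raw_n_per_group(4, 15)
print(round(ratio, 6))

# With sigma = 1, delta equals Cohen's d; total N = 2 x (per-group n rounded up)
for d in (0.2, 0.5, 0.8):
    print(d, 2 * math.ceil(raw_n_per_group(d, 1.0)))
```

The totals printed for d = 0.2, 0.5, and 0.8 (786, 126, 50) sit slightly below t-test-based values, which add a few participants for small samples.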

Superiority vs. Non-Inferiority Pattern Recognition


Superiority Trials: "Is new treatment better?"

- Standard sample size formulas apply

- Two-sided testing typical

- Effect size = meaningful clinical improvement


Non-Inferiority Trials: "Is new treatment not worse?"

- Sample size inflation: 20-80% increase

- One-sided testing with margin

- Non-inferiority margin = 25-50% of active control effect


Equivalence Trials: "Are treatments the same?"

- Largest sample sizes required

- Two one-sided tests (TOST)

- Equivalence margin = ±20% of reference effect

These pattern recognition frameworks enable rapid identification of appropriate sample size approaches for diverse clinical research scenarios. The next section examines how to systematically compare and discriminate between different power calculation methods.


🔬 Power Calculation Discrimination: Advanced Statistical Architecture

Power Calculation Method Discrimination Matrix

| Method | Best Application | Sample Size Impact | Power Characteristics | Critical Limitations |
|---|---|---|---|---|
| t-test (unpaired) | Independent groups, continuous | Baseline standard | 80% power, normal distribution | Assumes equal variances |
| t-test (paired) | Before/after, crossover | 50-75% reduction | High power with correlation | Requires paired observations |
| Mann-Whitney U | Non-normal distributions | ≈5-15% inflation for normal data | Robust to outliers | Slightly lower power than t-test for normal data |
| Chi-square test | Independent proportions | Standard for binary | Good for balanced groups | Poor for small expected counts |
| Fisher's exact | Small samples, rare events | Slight inflation (conservative test) | Exact p-values | Computationally intensive |
| Log-rank test | Survival comparisons | Event-driven calculation | Handles censoring well | Assumes proportional hazards |

Distribution Assumptions and Power Impact


Normal Distribution Requirements

- t-tests: Robust to mild violations with N ≥ 30

- ANOVA: Central limit theorem applies with N ≥ 15 per group

- Regression: Residuals must be normal, not raw data

- Skewed data: 10-20% power loss

- Heavy tails: 15-30% power loss

- Outliers: 20-50% power loss


Non-Parametric Alternatives

- Mann-Whitney U: 95% efficiency vs t-test for normal data

- Wilcoxon signed-rank: 95% efficiency vs paired t-test

- Kruskal-Wallis: 95% efficiency vs one-way ANOVA

- Sample size inflation: 5-15% for normal data

- Power advantage: 20-40% for skewed data
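The 95% efficiency figure is the asymptotic relative efficiency (ARE) of rank tests under normality, 3/π ≈ 0.955, and dividing a t-test sample size by it gives a rough rank-test sample size. This is a sketch under that large-sample assumption; `rank_test_n` is a hypothetical helper, and the 64-per-group input is an assumed t-test result (d = 0.5, 80% power).

```python
import math

# ARE of Mann-Whitney U / Wilcoxon vs the t-test when data are normal
ARE_NORMAL = 3 / math.pi

def rank_test_n(n_t_test: int, are: float = ARE_NORMAL) -> int:
    """Inflate a t-test sample size for a rank-based test using its ARE."""
    return math.ceil(n_t_test / are)

print(round(ARE_NORMAL, 3))   # 0.955
print(rank_test_n(64))        # 68 per group
```

The ARE-based inflation is about 5%; the 15% upper end quoted above works as a more conservative planning margin.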

Correlation Structure Impact on Power


High Correlation (r ≥ 0.7)

- Baseline-outcome correlation in chronic diseases

- Sample size reduction: ≥49% (about 75% at r ≈ 0.87), since ANCOVA multiplies n by 1 - r²

- Effective sample size gain: about 2x or more

- Examples: Blood pressure, cholesterol, pain scores


Moderate Correlation (r = 0.3-0.7)

- Typical biomarker correlations

- Sample size reduction: about 9-49%

- Effective sample size gain: about 10-96%

- Examples: Inflammatory markers, quality of life


Low Correlation (r < 0.3)

- Behavioral outcomes, complex interventions

- Sample size reduction: <9%

- Effective sample size gain: <10%

- Examples: Adherence measures, patient satisfaction

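⭐ Clinical Pearl: Baseline adjustment in randomized trials increases power even when groups are balanced. In a hypertension trial where baseline and follow-up systolic BP correlate at r = 0.8, ANCOVA leaves only 1 - 0.8² = 36% of the unadjusted variance, cutting the required sample size by about 64% compared with a simple t-test on follow-up values.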
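A sketch of the ANCOVA adjustment, assuming the large-sample approximation in which residual variance shrinks by the factor (1 - r²). The unadjusted 142 per group is a hypothetical starting point, and `ancova_n` is an illustrative name.

```python
import math

def ancova_n(n_unadjusted: int, r: float) -> int:
    """Per-group n after adjusting for a baseline covariate with correlation r.

    ANCOVA multiplies the residual variance, and hence the required n,
    by (1 - r^2) in large samples.
    """
    return math.ceil(n_unadjusted * (1 - r**2))

for r in (0.3, 0.5, 0.7, 0.8):
    print(r, ancova_n(142, r))
```

At r = 0.8 the requirement falls from 142 to 52 per group, the 64% reduction described in the pearl.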

Multiple Comparison Adjustments


Bonferroni Correction

- Conservative approach: α/k where k = comparisons

- Power reduction: Severe for multiple endpoints

- Sample size inflation: ≈50-70% for k = 5-10 comparisons (80% power, two-sided α = 0.05)


False Discovery Rate (FDR)

- Less conservative: Controls proportion of false discoveries

- Power preservation: Better than Bonferroni

- Sample size inflation: 20-50% for multiple comparisons


Hierarchical Testing

- Primary endpoint: Full α = 0.05

- Secondary endpoints: Conditional on primary success

- Power optimization: No inflation for primary outcome

💡 Master This: The multiple comparison dilemma: Testing 5 secondary endpoints with Bonferroni correction requires α = 0.01 per test, inflating sample size by ≈50% (at 80% power). Hierarchical testing preserves full power for the primary endpoint while controlling family-wise error.
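Because n scales with (Z_α/2 + Z_β)², the Bonferroni penalty can be computed as a ratio that does not depend on σ or Δ. A sketch assuming two-sided tests and a fixed power target; `bonferroni_inflation` is a hypothetical helper.

```python
from statistics import NormalDist

def bonferroni_inflation(k: int, alpha: float = 0.05, power: float = 0.80) -> float:
    """Ratio of required n with Bonferroni (alpha/k) vs unadjusted alpha, two-sided."""
    z = NormalDist()
    z_beta = z.inv_cdf(power)
    unadjusted = (z.inv_cdf(1 - alpha / 2) + z_beta) ** 2
    adjusted = (z.inv_cdf(1 - alpha / (2 * k)) + z_beta) ** 2
    return adjusted / unadjusted

for k in (2, 5, 10):
    print(k, round(bonferroni_inflation(k), 2))
```

For k = 5 the ratio is about 1.49, and for k = 10 about 1.70.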

Interim Analysis Power Considerations


Group Sequential Designs

- Alpha spending: Distributed across analyses

- Sample size inflation: 5-15% for 2-3 interim looks

- Early stopping: Efficacy or futility boundaries


Adaptive Sample Size Re-estimation

- Blinded re-estimation: No alpha penalty

- Unblinded re-estimation: Requires alpha adjustment

- Sample size increase: Up to 2-3x original calculation

These discrimination frameworks enable precise selection of power calculation methods that optimize study efficiency while maintaining statistical rigor. The next section explores evidence-based treatment algorithms for common power calculation scenarios.


⚖️ Evidence-Based Power Optimization: Treatment Algorithm Mastery

Power Optimization Treatment Algorithm

Evidence-Based Design Optimization Strategies


Outcome Measurement Precision Enhancement

- Strategy: Reduce measurement error through standardization

- Evidence: Halving the outcome standard deviation = 75% sample size reduction (n scales with σ²)

- Implementation:

- Automated measurements: 20-40% error reduction

- Trained assessors: 30-50% error reduction

- Multiple measurements: √k error reduction for k measurements

- Success Rate: 85-95% power achievement with 30-60% fewer participants


Baseline Covariate Optimization

- Strategy: Include prognostic baseline variables

- Evidence: Baseline covariates explaining R² = 0.5 of outcome variance (r ≈ 0.71) = 50% sample reduction

- Implementation:

- Baseline outcome measurement: r = 0.6-0.9 typical

- Prognostic biomarkers: r = 0.3-0.7 range

- Demographic stratification: r = 0.2-0.5 improvement

- Success Rate: 90-95% power achievement with 20-50% sample reduction
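The measurement-precision strategy only shrinks the measurement-error part of the outcome variance; true between-person variation is untouched. This sketch assumes a hypothetical split of a 15 mmHg SD into 12 mmHg between-person variation and 9 mmHg measurement error (12² + 9² = 15²); `total_variance` is an illustrative name.

```python
def total_variance(sd_between: float, sd_error: float, k: int) -> float:
    """Variance of a participant's mean of k repeated measurements.

    Averaging divides only the measurement-error variance by k.
    """
    return sd_between**2 + sd_error**2 / k

single = total_variance(12, 9, k=1)   # 225, i.e. SD = 15 mmHg
for k in (1, 2, 4):
    # Required n scales with the outcome variance
    print(k, round(total_variance(12, 9, k) / single, 3))
```

Under these assumptions, four measurements per visit cut n by 27%, and even unlimited repeats could not go below 144/225 = 64% of the original; the 75% figure applies only when the total SD halves.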

Treatment Algorithm by Study Phase

| Study Phase | Power Target | Optimization Strategy | Sample Size Range | Success Metrics |
|---|---|---|---|---|
| Phase I | 70-80% | Safety run-in design | 20-100 total | MTD identification: 90% |
| Phase II | 80-85% | Simon two-stage | 50-300 total | Activity signal: 85% |
| Phase III | 90-95% | Adaptive enrichment | 500-5000 total | Regulatory approval: 60% |
| Phase IV | 80-90% | Pragmatic design | 1000-50000 total | Real-world effectiveness: 75% |

Power Optimization Evidence Base


Crossover Design Efficiency

- Meta-analysis evidence: 65% average sample reduction vs parallel groups

- Optimal conditions: Chronic stable conditions, reversible treatments

- Washout requirements: ≥5 half-lives for <10% carryover effect

- Success rate: 90% power achievement in 75% of appropriate applications


Cluster Randomization Optimization

- ICC minimization strategies:

- Individual-level outcomes: ICC = 0.01-0.03

- Provider-level outcomes: ICC = 0.05-0.15

- Facility-level outcomes: ICC = 0.10-0.30

- Cluster size optimization: 15-25 participants per cluster optimal

- Design effect reduction: Stratified randomization reduces ICC by 20-40%


⭐ Clinical Pearl: Stepped-wedge cluster designs require 2-3x more clusters than parallel cluster RCTs but provide within-cluster comparisons that reduce ICC impact by 50-70%.

Adaptive Power Optimization Protocols


Blinded Sample Size Re-estimation

- Timing: 50% enrollment completion

- Parameters: Pooled variance re-estimation only

- Alpha penalty: None (maintains Type I error)

- Sample increase limit: 2-3x original calculation

- Success rate: 95% achieve target power vs 75% fixed designs


Unblinded Interim Analysis

- Alpha spending function: O'Brien-Fleming boundaries

- Efficacy stopping: Z > ≈2.80 at a single interim at 50% enrollment (two-look O'Brien-Fleming design)

- Futility stopping: Conditional power <20%

- Sample size increase: Up to 100% with alpha adjustment

- Overall power: 90-95% with 15-25% average sample inflation
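O'Brien-Fleming boundaries are often implemented through the Lan-DeMets spending function, which sets how much alpha is available at each information fraction t. A sketch for the first look only, assuming two-sided α = 0.05; later looks need recursive numerical integration, which is not shown. The spending-function boundary at t = 0.5 (≈2.77) is close to the classic two-look O'Brien-Fleming value of about 2.80.

```python
from statistics import NormalDist

z = NormalDist()

def obf_spending(t: float, alpha: float = 0.05) -> float:
    """Lan-DeMets O'Brien-Fleming-type alpha spent by information fraction t (two-sided)."""
    z_crit = z.inv_cdf(1 - alpha / 2)
    return 2 * (1 - z.cdf(z_crit / t**0.5))

spent = obf_spending(0.5)                   # alpha available at the 50% look
first_boundary = z.inv_cdf(1 - spent / 2)   # two-sided Z threshold at that look
print(round(spent, 4), round(first_boundary, 2))
```

Only about 0.0056 of the 0.05 is spent at the halfway look, which is why early stopping requires very strong evidence.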

Resource Optimization Evidence


Cost-Effectiveness Analysis

- Fixed design cost: $50,000-500,000 per 100 participants

- Adaptive design premium: 10-20% additional cost

- Power failure cost: Total study loss ($1M-100M)

- ROI calculation: Adaptive designs provide 3-5x ROI through power assurance


Timeline Optimization

- Recruitment acceleration: Adaptive enrichment reduces timeline by 20-40%

- Interim analysis timing: Every 6-12 months optimal

- Decision speed: 2-4 weeks for interim analysis completion

- Regulatory acceptance: >90% FDA approval for adaptive protocols

💡 Master This: The power optimization paradox: Spending 10-20% more on adaptive design methodology prevents 100% loss from underpowered studies. The expected value calculation: (0.9 × Study Success Value) - (0.1 × Total Study Cost) > Fixed Design Expected Value.

These evidence-based optimization algorithms ensure maximum statistical efficiency while maintaining regulatory acceptability and scientific rigor. The next section integrates these approaches into comprehensive multi-system frameworks for complex research scenarios.


🔗 Multi-System Integration: Advanced Power Architecture

Integrated Power Planning Across Research Phases


Phase I → Phase II Power Transition

- Safety run-in data: Informs Phase II sample size through adverse event rates

- Pharmacokinetic modeling: PK/PD relationships predict optimal dosing for Phase II

- Biomarker discovery: Phase I biomarkers become Phase II stratification factors

- Power inheritance: Phase I effect size estimates provide Phase II planning data


Phase II → Phase III Power Scaling

- Effect size refinement: Phase II provides effect estimates for Phase III planning, though estimates from trials selected because they were positive tend to be optimistic

- Population enrichment: Phase II subgroup analyses identify Phase III target populations

- Endpoint validation: Phase II surrogate endpoints validate Phase III primary outcomes

- Sample size inflation: Phase III requires 3-10x Phase II sample for regulatory approval

Regulatory Integration Framework

| Regulatory Body | Power Requirements | Sample Size Impact | Evidence Standards | Success Rates |
|---|---|---|---|---|
| FDA (US) | 90% power minimum | Large samples required | 2 pivotal trials | 60% approval |
| EMA (Europe) | 80-90% power | Moderate samples | 1-2 pivotal trials | 65% approval |
| PMDA (Japan) | 80% power | Population-specific | Bridging studies | 70% approval |
| Health Canada | 80% power | Follows FDA/EMA | Harmonized approach | 65% approval |
| NICE (UK) | Cost-effectiveness | Economic modeling | Real-world evidence | 45% approval |

Multi-Endpoint Power Architecture


Hierarchical Testing Strategy

- Primary endpoint: Full α = 0.05, 90% power

- Key secondary: Conditional testing after primary success

- Exploratory endpoints: No alpha control, descriptive analysis

- Safety endpoints: Separate alpha allocation, 80% power for serious AEs


Composite Endpoint Optimization

- Component weighting: Clinical importance × event frequency

- Power calculation: The overall composite event rate drives sample size, so the most frequent component usually dominates

- Interpretation challenges: Individual components may not reach significance

- Regulatory acceptance: Clinically meaningful components required

Advanced Integration Strategies


Platform Trial Architecture

- Shared control arm: 50-70% sample size reduction across multiple treatments

- Adaptive randomization: Response-adaptive allocation to promising arms

- Seamless Phase II/III: Combined analysis with alpha preservation

- Power efficiency: 2-3x more efficient than separate trials


Basket Trial Design

- Histology-independent: Biomarker-driven patient selection

- Borrowing strength: Bayesian methods share information across tumor types

- Power calculation: Complex hierarchical modeling required

- Regulatory pathway: Tissue-agnostic approvals possible

⭐ Clinical Pearl: Master protocols (platform, basket, umbrella trials) achieve 90% power with 40-60% fewer total participants than separate trials through intelligent design integration and adaptive features.

Real-World Evidence Integration


Pragmatic Trial Design

- Broad inclusion criteria: Real-world populations vs selected cohorts

- Usual care comparators: Standard practice vs placebo controls

- Effectiveness outcomes: Patient-relevant endpoints vs surrogate measures

- Power considerations: Greater outcome variability and diluted treatment effects usually require larger samples


Registry-Based Randomized Trials

- Existing infrastructure: 50-80% cost reduction vs traditional trials

- Large sample sizes: 10,000-100,000 participants feasible

- Long-term follow-up: Years to decades of outcome data

- Power advantages: Small effect detection possible with massive samples

Economic Integration Framework


Cost-Effectiveness Power Calculations

- ICER thresholds: $50,000-150,000 per QALY in US/Europe

- Budget impact: Total healthcare spending implications

- Uncertainty analysis: Probabilistic sensitivity analysis for power estimation

- Value-based endpoints: Quality-adjusted outcomes require specialized power calculations


Resource Optimization Models

- Fixed budget constraints: Maximize power within budget limits

- Variable cost structures: Adaptive designs optimize cost-power trade-offs

- Opportunity cost: Alternative research investments consideration

- Portfolio optimization: Multiple studies with shared resources

💡 Master This: The integrated power ecosystem: Statistical power × Regulatory acceptance × Clinical meaningfulness × Economic viability = Successful drug development. Each component requires ≥80% probability for overall success >40%.

This multi-system integration framework enables comprehensive power planning that accounts for the complex interdependencies between statistical requirements, regulatory pathways, clinical significance, and resource constraints. The final section synthesizes these concepts into practical mastery tools for immediate clinical research application.


🎯 Power Mastery Arsenal: Clinical Research Command Center

Essential Power Calculation Arsenal

📌 Remember: POWER - Precision Optimizes Winning Every Research. Master these core formulas and you possess the foundation for any clinical trial design.

Core Sample Size Formulas:

- Two-sample t-test (n per group): $n = \frac{2\sigma^2(Z_{\alpha/2} + Z_\beta)^2}{(\mu_1 - \mu_2)^2}$

- Proportion comparison (n per group): $n = \frac{(Z_{\alpha/2}\sqrt{2\bar{p}(1-\bar{p})} + Z_\beta\sqrt{p_1(1-p_1) + p_2(1-p_2)})^2}{(p_1 - p_2)^2}$

- Survival analysis (events, 1:1 allocation): $d = \frac{4(Z_{\alpha/2} + Z_\beta)^2}{(\ln(HR))^2}$

Critical Power Values Quick Reference

| Power Level | Z_β Value | Clinical Application | Sample Size Impact (two-sided α = 0.05) |
|---|---|---|---|
| 70% | 0.52 | Pilot studies | Baseline |
| 80% | 0.84 | Standard trials | +27% vs 70% |
| 85% | 1.04 | Important outcomes | +45% vs 70% |
| 90% | 1.28 | Regulatory trials | +70% vs 70% |
| 95% | 1.645 | Critical decisions | +111% vs 70% |
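The relative sample sizes behind the table above follow from the fact that n scales with (Z_α/2 + Z_β)², whatever the effect size or SD. A sketch assuming two-sided α = 0.05; `relative_n` is a hypothetical helper.

```python
from statistics import NormalDist

z = NormalDist()
Z_ALPHA = z.inv_cdf(0.975)   # two-sided alpha = 0.05

def relative_n(power: float, reference_power: float = 0.70) -> float:
    """Sample size relative to a reference power level."""
    return ((Z_ALPHA + z.inv_cdf(power)) / (Z_ALPHA + z.inv_cdf(reference_power))) ** 2

for p in (0.80, 0.85, 0.90, 0.95):
    print(f"{p:.0%}: +{relative_n(p) - 1:.0%} vs 70%")
print(f"80% -> 90%: +{relative_n(0.90, 0.80) - 1:.0%}")
```

Moving from 80% to 90% power costs about a third more participants, not double.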


Cohen's d Interpretation

- d = 0.2: Small effect - Blood pressure 2-3 mmHg, requires N ≈ 800 total (80% power, α = 0.05)

- d = 0.5: Medium effect - Blood pressure 5-7 mmHg, requires N ≈ 130 total

- d = 0.8: Large effect - Blood pressure >10 mmHg, requires N ≈ 50 total


Clinical Significance Thresholds

- Hypertension: ≥5 mmHg systolic reduction

- Cholesterol: ≥15% LDL reduction

- Pain scales: ≥1.5 points on 10-point scale

- Quality of life: ≥0.5 SD improvement

- Mortality: ≥20% relative risk reduction

⭐ Clinical Pearl: Sample size scales with the square of (Z_α/2 + Z_β), not with power itself. At two-sided α = 0.05, moving from 80% to 90% power increases sample size by about 34%, and moving from 90% to 95% adds about another 24%.

Rapid Decision Framework

Design Effect Multipliers

- Parallel Groups: ×1.0 (baseline)

- Crossover Design: ×0.5-0.75 (correlation-dependent)

- Cluster RCT: ×(1 + (m-1)×ICC) where m = cluster size

- Non-inferiority: ×1.2-1.8 (margin-dependent)

- Equivalence: ×1.5-2.5 (margin-dependent)

- Multiple comparisons: ×1.2-3.0 (correction method-dependent)

Power Optimization Checklist


Measurement Precision Enhancement

- Standardized protocols reduce error by 30-50%

- Automated measurements reduce error by 20-40%

- Multiple measurements reduce error by √k factor

- Trained assessors reduce error by 25-45%


Design Efficiency Maximization

- Baseline covariate adjustment: 20-50% sample reduction

- Stratified randomization: 10-30% power increase

- Adaptive sample size: 20-40% efficiency gain

- Interim analyses: 5-15% sample inflation acceptable

💡 Master This: The power optimization hierarchy: Effect size > Sample size > Alpha level > Power target. Doubling the standardized effect size (for example, by halving measurement noise) has the same impact as quadrupling the sample size.

Emergency Power Calculations

Quick Approximations for Immediate Decisions:

- Two-group comparison (80% power, α=0.05): N ≈ 16/d² per group

- Proportion comparison: N ≈ 16p̄(1-p̄)/Δ² per group, where p̄ is the average of the two proportions

- Survival analysis (1:1 allocation): Events ≈ 32/ln(HR)²

Sample Size Inflation Factors:

- 10% dropout rate: ×1.11

- 20% dropout rate: ×1.25

- 30% dropout rate: ×1.43
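These shortcuts are easy to script. A sketch assuming 80% power and two-sided α = 0.05: the constant 16 is Lehr's rule (2 × (1.96 + 0.84)² ≈ 15.7), and 32 for events comes from the Schoenfeld form with 1:1 allocation (4 × (1.96 + 0.84)² ≈ 31.4). The helper names are hypothetical.

```python
import math

def lehr_n_per_group(d: float) -> float:
    """Lehr's rule of thumb: n ≈ 16 / d^2 per group."""
    return 16 / d**2

def events_rule(hr: float) -> float:
    """Rule-of-thumb events for a log-rank test with 1:1 allocation: ≈ 32 / ln(HR)^2."""
    return 32 / math.log(hr) ** 2

def inflate_for_dropout(n: float, dropout: float) -> int:
    """Divide by the retention fraction so that n completers remain after dropout."""
    return math.ceil(n / (1 - dropout))

n = lehr_n_per_group(0.5)                  # 64 per group
print(n, inflate_for_dropout(n, 0.20))     # enroll 80 per group for 20% dropout
print(math.ceil(events_rule(0.7)))         # events for HR = 0.7
```

The dropout helper reproduces the multipliers listed above: 1/(1 - 0.20) = 1.25.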

Critical Success Thresholds

⚠️ Warning: Underpowered studies waste resources and may miss clinically important effects. With 80% power, 1 in 5 trials will miss a real effect of the assumed size, which is unacceptable for life-saving interventions.

Power Adequacy Standards:

- Pilot studies: ≥70% power acceptable

- Phase II trials: ≥80% power required

- Phase III trials: ≥90% power preferred

- Regulatory submissions: ≥90% power expected

- Safety endpoints: ≥80% power for serious adverse events

This power mastery arsenal provides the essential tools for rapid, accurate sample size calculations and power optimization across all clinical research scenarios. Master these frameworks, and you possess the statistical foundation for successful clinical trial design and execution.


Practice Questions: Power and sample size

Test your understanding with these related questions

A 21-year-old man presents to the office for a follow-up visit. He was recently diagnosed with type 1 diabetes mellitus after being hospitalized for diabetic ketoacidosis following a respiratory infection. He is here today to discuss treatment options available for his condition. The doctor mentions a recent study in which researchers have developed a new version of the insulin pump that appears efficacious in type 1 diabetics. They are currently comparing it to insulin injection therapy. This new pump is not yet available, but it looks very promising. At what stage of clinical trials is this current treatment most likely at?