Multiple comparison problem US Medical PG Practice Questions and MCQs

Practice US Medical PG questions for Multiple comparison problem. These multiple choice questions (MCQs) cover important concepts and help you prepare for your exams.

Multiple comparison problem US Medical PG Question 1: A randomized double-blind controlled trial is conducted on the efficacy of 2 different ACE-inhibitors. The null hypothesis is that both drugs will be equivalent in their blood-pressure-lowering abilities. The study concluded, however, that Medication 1 was more efficacious in lowering blood pressure than medication 2 as determined by a p-value < 0.01 (with significance defined as p ≤ 0.05). Which of the following statements is correct?

- A. We can accept the null hypothesis.

- B. We can reject the null hypothesis. (Correct Answer)

- C. This trial did not reach statistical significance.

- D. There is a 0.1% chance that medication 2 is superior.

- E. There is a 10% chance that medication 1 is superior.

Multiple comparison problem Explanation: ***We can reject the null hypothesis.***

- A **p-value < 0.01** indicates that the observed difference is **statistically significant** at the **α = 0.05 level**, meaning there is strong evidence against the null hypothesis.

- When a result is statistically significant (p < α), we **reject the null hypothesis**. This is the standard statistical terminology for concluding that the observed effect is unlikely to be due to chance alone.

*We can accept the null hypothesis.*

- A **p-value < 0.01** is **less than the significance level of 0.05**, providing strong evidence to **reject the null hypothesis**, not accept it.

- Accepting the null hypothesis would imply there's no treatment effect, which contradicts the study's finding that Medication 1 was more efficacious.

- Note: In hypothesis testing, we never truly "accept" the null hypothesis; we either reject it or fail to reject it.

*This trial did not reach statistical significance.*

- The trial **did reach statistical significance** because the **p-value (p < 0.01) is less than the defined significance level (p ≤ 0.05)**.

- A p-value below 0.01 means that, if the null hypothesis were true, results at least as extreme as those observed would be expected less than 1% of the time.

*There is a 0.1% chance that medication 2 is superior.*

- The p-value of **p < 0.01** relates to the probability of observing the data (or more extreme data) given the null hypothesis is true, not the probability of one medication being superior.

- It does not directly provide the probability of Medication 2 being superior; rather, it indicates the **unlikelihood of the observed difference** if no true difference exists.

*There is a 10% chance that medication 1 is superior.*

- A **p-value of < 0.01** means there is **less than a 1% chance** of observing such a result if the null hypothesis (no difference) were true, not a 10% chance of superiority.

- The p-value represents the probability of observing the data, or more extreme data, assuming the **null hypothesis is true**, not the probability that one treatment is superior.
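
The definition of a p-value used above can be made concrete with a small exact permutation test. This is only an illustrative sketch: the blood-pressure reductions are invented numbers, `permutation_p_value` is a hypothetical helper name, and the trial in the question does not say which test was actually used.

```python
from itertools import combinations

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation p-value for a difference in means.

    Under the null hypothesis the group labels are interchangeable, so the
    p-value is the share of all possible relabelings whose mean difference
    is at least as extreme as the one actually observed.
    """
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)
    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)

    extreme = 0
    splits = 0
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
        splits += 1
    return extreme / splits

# Hypothetical reductions in systolic pressure (mmHg), not data from the trial.
medication_1 = [12, 14, 15, 16, 18]
medication_2 = [5, 6, 7, 8, 9]

alpha = 0.05
p = permutation_p_value(medication_1, medication_2)
# Only 2 of the 252 possible splits are this extreme, so p = 2/252.
print(f"p = {p:.4f}, reject null: {p <= alpha}")
```

The value computed here is a statement about the data under the null hypothesis. It is not the probability that either medication is superior.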

Multiple comparison problem US Medical PG Question 2: A surgeon is interested in studying how different surgical techniques impact the healing of tendon injuries. In particular, he will compare 3 different types of suture repairs biomechanically in order to determine the maximum load before failure of the tendon 2 weeks after repair. He collects data on maximum load for 90 different repaired tendons from an animal model. Thirty tendons were repaired using each of the different suture techniques. Which of the following statistical measures is most appropriate for analyzing the results of this study?

- A. Chi-squared

- B. Wilcoxon rank sum

- C. Pearson r coefficient

- D. Student t-test

- E. ANOVA (Correct Answer)

Multiple comparison problem Explanation: ***ANOVA***

- **ANOVA (Analysis of Variance)** is appropriate here because it compares the means of **three or more independent groups** (the three different suture techniques) on a continuous dependent variable (maximum load before failure).

- The study has three distinct repair techniques, each with 30 tendons, making ANOVA suitable for determining if there are statistically significant differences among their mean failure loads.

*Chi-squared*

- The **Chi-squared test** is used for analyzing **categorical data** (frequencies or proportions) to determine if there is an association between two nominal variables.

- This study involves quantitative measurement (maximum load), not categorical data, making Chi-squared inappropriate.

*Wilcoxon rank sum*

- The **Wilcoxon rank sum test** (also known as Mann-Whitney U test) is a **non-parametric test** used to compare two independent groups when the data is not normally distributed or is ordinal.

- While the study has independent groups, it involves three groups, and the dependent variable is continuous, making ANOVA a more powerful and appropriate choice assuming normal distribution.

*Pearson r coefficient*

- The **Pearson r coefficient** measures the **strength and direction of a linear relationship between two continuous variables**.

- This study aims to compare means across different groups, not to determine the correlation between two continuous variables.

*Student t-test*

- The **Student t-test** is used to compare the means of **exactly two groups** (either independent or paired) on a continuous dependent variable.

- This study involves comparing three different suture techniques, not just two, making the t-test unsuitable.
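
As a rough sketch of what a one-way ANOVA computes, the F statistic is the ratio of between-group to within-group variability. The function name `one_way_anova_f` and the failure loads below are made up for illustration; the question does not provide raw data.

```python
from statistics import mean

def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for group in groups for x in group]
    grand_mean = mean(all_values)

    ss_between = 0.0  # variability of group means around the grand mean
    ss_within = 0.0   # variability of observations around their own group mean
    for group in groups:
        group_mean = mean(group)
        ss_between += len(group) * (group_mean - grand_mean) ** 2
        ss_within += sum((x - group_mean) ** 2 for x in group)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical maximum loads (newtons) for three suture techniques.
suture_a = [41.0, 44.5, 39.8, 43.2]
suture_b = [47.1, 49.0, 46.3, 50.2]
suture_c = [40.5, 42.0, 38.9, 41.7]

f_stat, df_b, df_w = one_way_anova_f(suture_a, suture_b, suture_c)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

Turning F into a p-value requires the F distribution, which the standard library does not provide. A library routine such as `scipy.stats.f_oneway` returns both the statistic and the p-value.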

Multiple comparison problem US Medical PG Question 3: A researcher is trying to determine whether a newly discovered substance X can be useful in promoting wound healing after surgery. She conducts this study by enrolling the next 100 patients that will be undergoing this surgery and separating them into 2 groups. She decides which patient will be in which group by using a random number generator. Subsequently, she prepares 1 set of syringes with the novel substance X and 1 set of syringes with a saline control. Both of these sets of syringes are unlabeled and the substances inside cannot be distinguished. She gives the surgeon performing the surgery 1 of the syringes and does not inform him nor the patient which syringe was used. After the study is complete, she analyzes all the data that was collected and performs statistical analysis. This study most likely provides which level of evidence for use of substance X?

- A. Level 3

- B. Level 1 (Correct Answer)

- C. Level 4

- D. Level 5

- E. Level 2

Multiple comparison problem Explanation: ***Level 1***

- The study design described is a **randomized controlled trial (RCT)**, which is considered the **highest level of evidence (Level 1)** in the hierarchy of medical evidence.

- Key features like **randomization**, **control group**, and **blinding (double-blind)** help minimize bias and strengthen the validity of the findings.

*Level 2*

- Level 2 evidence typically comprises **well-designed controlled trials without randomization** (non-randomized controlled trials) or **high-quality cohort studies**.

- While strong, they do not possess the same level of internal validity as randomized controlled trials.

*Level 3*

- Level 3 evidence typically includes **case-control studies** or **cohort studies**, which are observational designs and carry a higher risk of bias compared to RCTs.

- These studies generally do not involve randomization or intervention assignment by the researchers.

*Level 4*

- Level 4 evidence is usually derived from **case series** or **poor quality cohort and case-control studies**.

- These studies provide descriptive information or investigate associations without strong control for confounding factors.

*Level 5*

- Level 5 evidence is the **lowest level of evidence**, consisting of **expert opinion** or **animal research/bench research**.

- This level lacks human clinical data or systematic investigative rigor needed for higher evidence levels.

Multiple comparison problem US Medical PG Question 4: An investigator is measuring the blood calcium level in a sample of female cross country runners and a control group of sedentary females. If she would like to compare the means of the two groups, which statistical test should she use?

- A. Chi-square test

- B. Linear regression

- C. t-test (Correct Answer)

- D. ANOVA (Analysis of Variance)

- E. F-test

Multiple comparison problem Explanation: ***t-test***

- A **t-test** is appropriate for comparing the means of two independent groups, such as the blood calcium levels between runners and sedentary females.

- It assesses whether the observed difference between the two sample means is larger than would be expected from chance variation alone.

*Chi-square test*

- The **chi-square test** is used to analyze categorical data to determine if there is a significant association between two variables.

- It is not suitable for comparing continuous variables like blood calcium levels.

*Linear regression*

- **Linear regression** is used to model the relationship between a dependent variable (outcome) and one or more independent variables (predictors).

- It aims to predict the value of a variable based on the value of another, rather than comparing means between groups.

*ANOVA (Analysis of Variance)*

- **ANOVA** is used to compare the means of **three or more independent groups**.

- Since there are only two groups being compared in this scenario, a t-test is more specific and appropriate.

*F-test*

- The **F-test** is primarily used to compare the variances of two populations or to assess the overall significance of a regression model.

- While it is the basis for ANOVA, it is not the direct test for comparing the means of two groups.
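
A minimal sketch of the two-sample comparison, assuming the classic Student's t-test with equal variances in both groups. The calcium values and the name `pooled_t_statistic` are hypothetical and not taken from the question.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t_statistic(sample_a, sample_b):
    """Return (t, degrees_of_freedom) for an equal-variance two-sample t-test."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(sample_a)
                  + (n_b - 1) * variance(sample_b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(sample_a) - mean(sample_b)) / std_err
    return t, df

# Hypothetical serum calcium values (mg/dL).
runners = [9.1, 9.4, 9.0, 9.6, 9.3]
sedentary = [9.5, 9.8, 9.4, 9.9, 9.7]

t, df = pooled_t_statistic(runners, sedentary)
print(f"t = {t:.2f} with {df} degrees of freedom")
```

The statistic is then compared with a t distribution on `df` degrees of freedom. A library routine such as `scipy.stats.ttest_ind` performs the same comparison and also reports the p-value.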

Multiple comparison problem US Medical PG Question 5: A research group wants to assess the safety and toxicity profile of a new drug. A clinical trial is conducted with 20 volunteers to estimate the maximum tolerated dose and monitor the apparent toxicity of the drug. The study design is best described as which of the following phases of a clinical trial?

- A. Phase 0

- B. Phase III

- C. Phase V

- D. Phase II

- E. Phase I (Correct Answer)

Multiple comparison problem Explanation: ***Phase I***

- **Phase I clinical trials** involve a small group of healthy volunteers (typically 20-100) to primarily assess **drug safety**, determine a safe dosage range, and identify side effects.

- The main goal is to establish the **maximum tolerated dose (MTD)** and evaluate the drug's pharmacokinetic and pharmacodynamic profiles.

*Phase 0*

- **Phase 0 trials** are exploratory studies conducted in a very small number of subjects (10-15) to gather preliminary data on a drug's **pharmacodynamics and pharmacokinetics** in humans.

- They involve microdoses, not intended to have therapeutic effects, and thus cannot determine toxicity or MTD.

*Phase III*

- **Phase III trials** are large-scale studies involving hundreds to thousands of patients to confirm the drug's **efficacy**, monitor side effects, compare it to standard treatments, and collect information that will allow the drug to be used safely.

- These trials are conducted after safety and initial efficacy have been established in earlier phases.

*Phase V*

- "Phase V" is not a standard, recognized phase in the traditional clinical trial classification (Phase 0, I, II, III, IV).

- This term might be used in some non-standard research contexts or for post-marketing studies that go beyond Phase IV surveillance, but it is not a formal phase for initial drug development.

*Phase II*

- **Phase II trials** involve several hundred patients with the condition the drug is intended to treat, focusing on **drug efficacy** and further evaluating safety.

- While safety is still monitored, the primary objective shifts to determining if the drug works for its intended purpose and at what dose.

Multiple comparison problem US Medical PG Question 6: A pharmaceutical corporation is developing a research study to evaluate a novel blood test to screen for breast cancer. They enrolled 800 patients in the study, half of which have breast cancer. The remaining enrolled patients are age-matched controls who do not have the disease. Of those in the diseased arm, 330 are found positive for the test. Of the patients in the control arm, only 30 are found positive. What is this test’s sensitivity?

- A. 330 / (330 + 30)

- B. 330 / (330 + 70) (Correct Answer)

- C. 370 / (30 + 370)

- D. 370 / (70 + 370)

- E. 330 / (400 + 400)

Multiple comparison problem Explanation: ***330 / (330 + 70)***

- **Sensitivity** measures the proportion of actual **positives** that are correctly identified as such.

- In this study, there are **400 diseased patients** (half of 800). Of these, 330 tested positive (true positives), meaning 70 tested negative (false negatives). So sensitivity is **330 / (330 + 70)**.

*330 / (330 + 30)*

- This calculation represents the **positive predictive value**, which is the probability that subjects with a positive screening test truly have the disease. It uses **true positives / (true positives + false positives)**.

- It does not correctly calculate **sensitivity**, which requires knowing the total number of diseased individuals.

*370 / (30 + 370)*

- This expression calculates **specificity**, the proportion of actual negatives that are correctly identified: **true negatives / (true negatives + false positives)**.

- With 400 controls and 30 false positives, there are 370 true negatives, so specificity is 370 / 400. The question asks for sensitivity, not specificity.

*370 / (70 + 370)*

- This expression calculates the **negative predictive value**, the probability that a subject with a negative test truly does not have the disease: **true negatives / (true negatives + false negatives)**.

- It combines the 370 true negatives from the control arm with the 70 false negatives from the diseased arm, so it does not measure **sensitivity**.

*330 / (400 + 400)*

- This calculation attempts to divide true positives by the total study population (800 patients).

- This metric represents the **prevalence of true positives within the entire study cohort**, not the test's **sensitivity**.
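
The four answer choices built from the 2×2 table can be checked with a few lines of arithmetic. The helper name `diagnostic_metrics` is an assumption for illustration; the counts come from the question (400 diseased with 330 positive, 400 controls with 30 positive).

```python
def diagnostic_metrics(tp, fn, fp, tn):
    """Standard measures derived from a 2x2 diagnostic table."""
    return {
        "sensitivity": tp / (tp + fn),  # true positives among the diseased
        "specificity": tn / (tn + fp),  # true negatives among the healthy
        "ppv": tp / (tp + fp),          # diseased among positive tests
        "npv": tn / (tn + fn),          # healthy among negative tests
    }

# 330 of 400 diseased test positive; 30 of 400 controls test positive.
metrics = diagnostic_metrics(tp=330, fn=400 - 330, fp=30, tn=400 - 30)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because the study enrolled equal numbers of diseased and healthy patients by design, the predictive values computed this way reflect a 50% prevalence and would differ in a real screening population.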

Multiple comparison problem US Medical PG Question 7: You submit a paper to a prestigious journal about the effects of coffee consumption on mesothelioma risk. The first reviewer lauds your clinical and scientific acumen, but expresses concern that your study does not have adequate statistical power. Statistical power refers to which of the following?

- A. The probability of detecting an association when no association exists.

- B. The probability of not detecting an association when an association does exist.

- C. The probability of detecting an association when an association does exist. (Correct Answer)

- D. The first derivative of work.

- E. The square root of the variance.

Multiple comparison problem Explanation: ***The probability of detecting an association when an association does exist.***

- **Statistical power** is defined as the probability that a study will correctly reject a false null hypothesis, meaning it will detect a true effect or association if one exists.

- A study with **adequate statistical power** is less likely to miss a real effect.

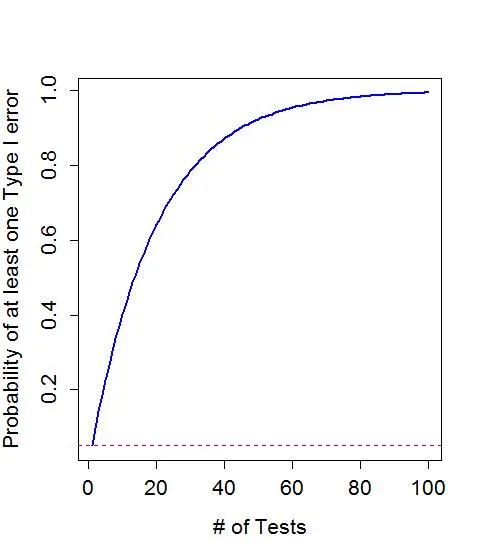

*The probability of detecting an association when no association exists.*

- This describes a **Type I error** or **false positive**, often represented by **alpha (α)**.

- It is the probability of incorrectly concluding an effect or association exists when, in reality, there is none.

*The probability of not detecting an association when an association does exist.*

- This refers to a **Type II error** or **false negative**, represented by **beta (β)**.

- **Statistical power** is calculated as **1 - β**, so this option describes the complement of power.

*The first derivative of work.*

- The first derivative of work with respect to time represents **power** in physics, which is the rate at which work is done.

- This option is a **distractor** from physics and is unrelated to statistical power in research.

*The square root of the variance.*

- The **square root of the variance** is the **standard deviation**, a measure of the dispersion or spread of data.

- This is a statistical concept but is not the definition of statistical power.

Multiple comparison problem US Medical PG Question 8: A survey was conducted in a US midwestern town in an effort to assess maternal mortality over the past year. The data from the survey are given in the table below:

| Measure | Count |
| --- | --- |
| Women of childbearing age | 250,000 |
| Maternal deaths | 2,500 |
| Number of live births | 100,000 |
| Number of deaths of women of childbearing age | 7,500 |

Maternal death is defined as the death of a woman while pregnant or within 42 days of termination of pregnancy from any cause related to, or aggravated by, the pregnancy. Which of the following is the maternal mortality rate in this midwestern town?

- A. 1,000 per 100,000 live births

- B. 33 per 100,000 live births

- C. 3,000 per 100,000 live births

- D. 33,300 per 100,000 live births

- E. 2,500 per 100,000 live births (Correct Answer)

Multiple comparison problem Explanation: ***2,500 per 100,000 live births***

- The maternal mortality rate is calculated as the number of **maternal deaths** per 100,000 **live births**. The given data directly provide these values.

- Calculation: (2,500 maternal deaths / 100,000 live births) × 100,000 = **2,500 per 100,000 live births**.

*1,000 per 100,000 live births*

- This value results from using **women of childbearing age** as the denominator: (2,500 / 250,000) × 100,000 = 1,000.

- The maternal mortality rate in this question is expressed per 100,000 live births, so the number of women of childbearing age is the wrong denominator.

*33 per 100,000 live births*

- This value is far lower than the correct rate. It matches the share of deaths among women of childbearing age that were maternal, expressed as a percentage: (2,500 / 7,500) × 100 ≈ 33.

- That figure is a proportionate mortality, not a rate per 100,000 live births.

*3,000 per 100,000 live births*

- This value results from using the wrong numerator and denominator: all deaths of women of childbearing age divided by the number of women of childbearing age, (7,500 / 250,000) × 100,000 = 3,000.

- The definition of maternal death is specific to causes related to or aggravated by pregnancy, so counting deaths from all causes does not measure maternal mortality.

*33,300 per 100,000 live births*

- This figure results from incorrectly calculating the proportion of maternal deaths among all deaths of women of childbearing age: (2,500 / 7,500) × 100,000 = 33,333.

- This is a conceptual error as the maternal mortality rate should use live births as the denominator, not total deaths of women of childbearing age.
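
The arithmetic behind each answer choice can be reproduced by swapping numerators and denominators from the table. This is an illustrative sketch; `per_100k` is a hypothetical helper name.

```python
def per_100k(numerator, denominator):
    """Scale a ratio to 'per 100,000' units."""
    return numerator * 100_000 / denominator

women_of_childbearing_age = 250_000
maternal_deaths = 2_500
live_births = 100_000
deaths_of_women = 7_500

# Correct: maternal deaths per 100,000 live births.
maternal_mortality = per_100k(maternal_deaths, live_births)

# Distractors: the same data with the wrong numerator or denominator.
per_women = per_100k(maternal_deaths, women_of_childbearing_age)
all_cause = per_100k(deaths_of_women, women_of_childbearing_age)
share_of_deaths = per_100k(maternal_deaths, deaths_of_women)

print(f"maternal mortality: {maternal_mortality:,.0f} per 100,000 live births")
print(f"wrong denominator:  {per_women:,.0f}")
print(f"all-cause deaths:   {all_cause:,.0f}")
print(f"share of deaths:    {share_of_deaths:,.0f}")
```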

Multiple comparison problem US Medical PG Question 9: The height of American adults is expected to follow a normal distribution, with a typical male adult having an average height of 69 inches with a standard deviation of 0.1 inches. An investigator has been informed about a community in the American Midwest with a history of heavy air and water pollution in which a lower mean height has been reported. The investigator plans to sample 30 male residents to test the claim that heights in this town differ significantly from the national average based on heights assumed to be normally distributed. The significance level is set at 10% and the probability of a type 2 error is assumed to be 15%. Based on this information, which of the following is the power of the proposed study?

- A. 0.10

- B. 0.85 (Correct Answer)

- C. 0.90

- D. 0.15

- E. 0.05

Multiple comparison problem Explanation: ***0.85***

- **Power** is defined as **1 - β**, where β is the **probability of a Type II error**.

- Given that the probability of a **Type II error (β)** is 15% or 0.15, the power of the study is 1 - 0.15 = **0.85**.

*0.10*

- This value represents the **significance level (α)**, which is the probability of committing a **Type I error** (rejecting a true null hypothesis).

- The significance level is distinct from the **power of the study**, which relates to Type II errors.

*0.90*

- This value would be the power if the **Type II error rate (β)** was 0.10 (1 - 0.10 = 0.90), but the question specifies a β of 0.15.

- It is also the complement of the significance level (1 - α), which is not the definition of power.

*0.15*

- This value is the **probability of a Type II error (β)**, not the power of the study.

- **Power** is the probability of correctly rejecting a false null hypothesis, which is 1 - β.

*0.05*

- While 0.05 is a common significance level (α), it is not given as the significance level in this question (which is 0.10).

- This value also does not represent the power of the study, which would be calculated using the **Type II error rate**.
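
To show how α, β, and power relate, here is a sketch that assumes a two-sided one-sample z-test with known standard deviation. The question does not name the test or the true difference in height, so the test choice, the function names, and the computed shift are all assumptions made for illustration.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1

def z_test_power(shift, sigma, n, alpha):
    """Power of a two-sided one-sample z-test when the true mean is off by `shift`."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    standardized = abs(shift) * sqrt(n) / sigma
    # Probability that the test statistic falls in either rejection tail.
    return (STD_NORMAL.cdf(standardized - z_crit)
            + STD_NORMAL.cdf(-standardized - z_crit))

def shift_for_power(power, sigma, n, alpha):
    """Approximate shift that yields the target power (ignores the far tail)."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    return (z_crit + STD_NORMAL.inv_cdf(power)) * sigma / sqrt(n)

# Values stated in the question.
sigma, n, alpha, beta = 0.1, 30, 0.10, 0.15

target_power = 1 - beta  # the answer to the question
shift = shift_for_power(target_power, sigma, n, alpha)
achieved = z_test_power(shift, sigma, n, alpha)

print(f"power = 1 - beta = {target_power:.2f}")
print(f"assumed shift of {shift:.3f} inches gives power {achieved:.3f}")
print(f"with no true shift, rejection rate = {z_test_power(0, sigma, n, alpha):.2f}")
```

The last line illustrates why 0.10 is not the power: when there is no true difference, the test still rejects at the rate α, which is the Type I error rate.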

Multiple comparison problem US Medical PG Question 10: You are reading through a recent article that reports significant decreases in all-cause mortality for patients with malignant melanoma following treatment with a novel biological infusion. Which of the following choices refers to the probability that a study will find a statistically significant difference when one truly does exist?

- A. Type II error

- B. Type I error

- C. Confidence interval

- D. p-value

- E. Power (Correct Answer)

Multiple comparison problem Explanation: ***Power***

- **Power** is the probability that a study will correctly reject the null hypothesis when it is, in fact, false (i.e., will find a statistically significant difference when one truly exists).

- A study with high power minimizes the risk of a **Type II error** (failing to detect a real effect).

*Type II error*

- A **Type II error** (or **beta error**) occurs when a study fails to reject a false null hypothesis, meaning it concludes there is no significant difference when one actually exists.

- This is the **opposite** of what the question describes, which asks for the probability of *finding* a difference.

*Type I error*

- A **Type I error** (or **alpha error**) occurs when a study incorrectly rejects a true null hypothesis, concluding there is a significant difference when one does not actually exist.

- The probability of a Type I error is set by the significance level **α** (e.g., 0.05); a result is declared significant when the **p-value** falls below α.

*Confidence interval*

- A **confidence interval** provides a range of values within which the true population parameter is likely to lie with a certain degree of confidence (e.g., 95%).

- It does not directly represent the probability of finding a statistically significant difference when one truly exists.

*p-value*

- The **p-value** is the probability of observing data as extreme as, or more extreme than, that obtained in the study, assuming the null hypothesis is true.

- It is used to determine statistical significance, but it is not the probability of detecting a true effect.
