Common misinterpretations of p-values US Medical PG Practice Questions and MCQs

Practice US Medical PG questions for Common misinterpretations of p-values. These multiple choice questions (MCQs) cover important concepts and help you prepare for your exams.

Common misinterpretations of p-values US Medical PG Question 1: A randomized double-blind controlled trial is conducted on the efficacy of 2 different ACE-inhibitors. The null hypothesis is that both drugs will be equivalent in their blood-pressure-lowering abilities. The study concluded, however, that Medication 1 was more efficacious in lowering blood pressure than medication 2 as determined by a p-value < 0.01 (with significance defined as p ≤ 0.05). Which of the following statements is correct?

- A. We can accept the null hypothesis.

- B. We can reject the null hypothesis. (Correct Answer)

- C. This trial did not reach statistical significance.

- D. There is a 0.1% chance that medication 2 is superior.

- E. There is a 10% chance that medication 1 is superior.

Common misinterpretations of p-values Explanation: ***We can reject the null hypothesis.***

- A **p-value < 0.01** indicates that the observed difference is **statistically significant** at the **α = 0.05 level**, meaning there is strong evidence against the null hypothesis.

- When a result is statistically significant (p < α), we **reject the null hypothesis**. This is the standard statistical terminology for concluding that the observed effect is unlikely to be due to chance alone.

*We can accept the null hypothesis.*

- A **p-value < 0.01** is **less than the significance level of 0.05**, providing strong evidence to **reject the null hypothesis**, not accept it.

- Accepting the null hypothesis would imply there's no treatment effect, which contradicts the study's finding that Medication 1 was more efficacious.

- Note: In hypothesis testing, we never truly "accept" the null hypothesis; we either reject it or fail to reject it.

*This trial did not reach statistical significance.*

- The trial **did reach statistical significance** because the **p-value (p < 0.01) is less than the defined significance level (p ≤ 0.05)**.

- A p-value below 0.01 means that, if the null hypothesis were true, results at least as extreme as those observed would be expected less than 1% of the time.

*There is a 0.1% chance that medication 2 is superior.*

- The p-value of **p < 0.01** relates to the probability of observing the data (or more extreme data) given the null hypothesis is true, not the probability of one medication being superior.

- It does not directly provide the probability of Medication 2 being superior; rather, it indicates the **unlikelihood of the observed difference** if no true difference exists.

*There is a 10% chance that medication 1 is superior.*

- A **p-value of < 0.01** means there is **less than a 1% chance** of observing such a result if the null hypothesis (no difference) were true, not a 10% chance of superiority.

- The p-value represents the probability of observing the data, or more extreme data, assuming the **null hypothesis is true**, not the probability that one treatment is superior.

Common misinterpretations of p-values US Medical PG Question 2: A group of 100 medical students took an end-of-year exam. The mean score on the exam was 70%, with a standard deviation of 25%. The professor states that a student's score must be within the 95% confidence interval of the mean to pass the exam. Which of the following is the minimum score a student can have to pass the exam?

- A. 45%

- B. 63.75%

- C. 67.5%

- D. 20%

- E. 65% (Correct Answer)

Common misinterpretations of p-values Explanation: ***65%***

- To find the **95% confidence interval (CI) of the mean**, we use the formula: Mean ± (Z-score × Standard Error). For a 95% CI, the Z-score is approximately **1.96**.

- The **Standard Error (SE)** is calculated as SD/√n, where n is the sample size (100 students). So, SE = 25%/√100 = 25%/10 = **2.5%**.

- The 95% CI is 70% ± (1.96 × 2.5%) = 70% ± 4.9%. The lower bound is 70% - 4.9% = **65.1%**, which rounds to **65%** as the minimum passing score.

*45%*

- This value is significantly lower than the calculated lower bound of the 95% confidence interval (approximately 65.1%).

- It would represent a score far outside the defined passing range.

*63.75%*

- This value falls below the calculated lower bound of the 95% confidence interval (approximately 65.1%).

- While close, this score would not meet the professor's criterion for passing.

*67.5%*

- This value is within the 95% confidence interval (65.1% to 74.9%) but is **not the minimum score**.

- Lower scores within the interval would still qualify as passing.

*20%*

- This score is extremely low and falls significantly outside the 95% confidence interval for a mean of 70%.

- It would indicate performance far below the defined passing threshold.
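The arithmetic in the correct-answer explanation can be checked with a minimal sketch. The function name `mean_confidence_interval` is an illustrative choice, and the sketch assumes the normal-approximation formula (Mean ± Z × SE) used in the explanation.

```python
import math

def mean_confidence_interval(mean, sd, n, z=1.96):
    """Return (lower, upper) for mean ± z * sd / sqrt(n)."""
    standard_error = sd / math.sqrt(n)  # SE = SD / sqrt(n)
    margin = z * standard_error         # half-width of the interval
    return mean - margin, mean + margin

# Values from the question: mean 70%, SD 25%, n = 100 students.
lower, upper = mean_confidence_interval(mean=70.0, sd=25.0, n=100)
print(f"95% CI: {lower:.1f}% to {upper:.1f}%")  # 95% CI: 65.1% to 74.9%
```

The lower bound of about 65.1% is what the answer choice rounds to 65%.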

Common misinterpretations of p-values US Medical PG Question 3: A research team develops a new monoclonal antibody checkpoint inhibitor for advanced melanoma that has shown promise in animal studies as well as high efficacy and low toxicity in early phase human clinical trials. The research team would now like to compare this drug to existing standard of care immunotherapy for advanced melanoma. The research team decides to conduct a non-randomized study where the novel drug will be offered to patients who are deemed to be at risk for toxicity with the current standard of care immunotherapy, while patients without such risk factors will receive the standard treatment. Which of the following best describes the level of evidence that this study can offer?

- A. Level 1

- B. Level 3 (Correct Answer)

- C. Level 5

- D. Level 4

- E. Level 2

Common misinterpretations of p-values Explanation: ***Level 3***

- A **non-randomized controlled trial** like the one described, where patient assignment to treatment groups is based on specific characteristics (risk of toxicity), falls into Level 3 evidence.

- This level typically includes **non-randomized controlled trials** and **well-designed cohort studies** with comparison groups, which are prone to selection bias and confounding.

- The study compares two treatments but lacks randomization, making it Level 3 evidence.

*Level 1*

- Level 1 evidence is the **highest level of evidence**, derived from **systematic reviews and meta-analyses** of multiple well-designed randomized controlled trials or large, high-quality randomized controlled trials.

- The described study is explicitly stated as non-randomized, ruling out Level 1.

*Level 2*

- Level 2 evidence involves at least one **well-designed randomized controlled trial** (RCT) or **systematic reviews** of randomized trials.

- The current study is *non-randomized*, which means it cannot be classified as Level 2 evidence, as randomization is a key criterion for this level.

*Level 4*

- Level 4 evidence includes **case series**, **case-control studies**, and **poorly designed cohort studies**.

- While the study is non-randomized, it is a controlled comparative trial rather than a case series or retrospective case-control study, placing it at Level 3.

*Level 5*

- Level 5 evidence is the **lowest level of evidence**, typically consisting of **expert opinion** without explicit critical appraisal, or based on physiology, bench research, or animal studies.

- While the drug was initially tested in animal studies, the current human comparative study offers a higher level of evidence than expert opinion or preclinical data.

Common misinterpretations of p-values US Medical PG Question 4: You are conducting a study comparing the efficacy of two different statin medications. Two groups are placed on different statin medications, statin A and statin B. Baseline LDL levels are drawn for each group and are subsequently measured every 3 months for 1 year. Average baseline LDL levels for each group were identical. The group receiving statin A exhibited an 11 mg/dL greater reduction in LDL in comparison to the statin B group. Your statistical analysis reports a p-value of 0.052. Which of the following best describes the meaning of this p-value?

- A. There is a 95% chance that the difference in reduction of LDL observed reflects a real difference between the two groups

- B. Though A is more effective than B, there is a 5% chance the difference in reduction of LDL between the two groups is due to chance

- C. If 100 permutations of this experiment were conducted, 5 of them would show similar results to those described above

- D. This is a statistically significant result

- E. There is a 5.2% chance of observing a difference in reduction of LDL of 11 mg/dL or greater even if the two medications have identical effects (Correct Answer)

Common misinterpretations of p-values Explanation: ***There is a 5.2% chance of observing a difference in reduction of LDL of 11 mg/dL or greater even if the two medications have identical effects***

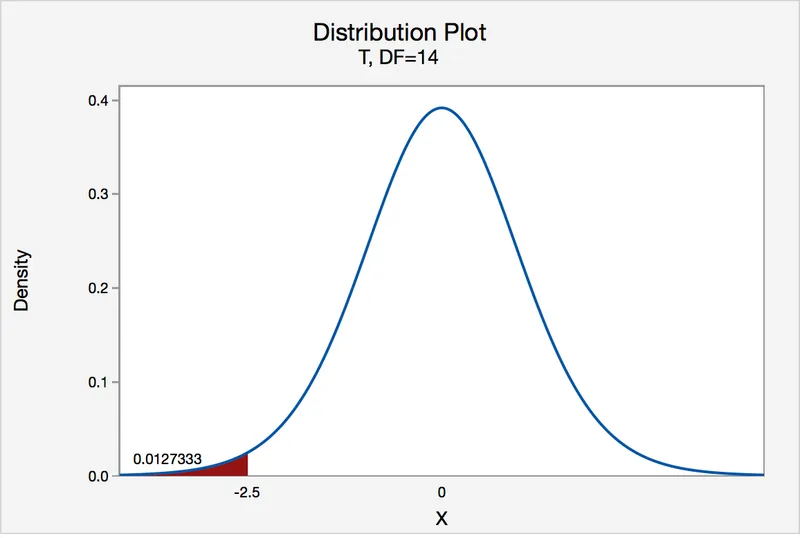

- The **p-value** represents the probability of observing results as extreme as, or more extreme than, the observed data, assuming the **null hypothesis** is true (i.e., there is no true difference between the groups).

- A p-value of 0.052 means that, if both statins were equally effective, **random variation** alone would produce a difference of 11 mg/dL or greater about **5.2%** of the time.

*There is a 95% chance that the difference in reduction of LDL observed reflects a real difference between the two groups*

- This statement is an incorrect interpretation of the p-value; it confuses the p-value with the **probability that the alternative hypothesis is true**.

- A p-value does not directly tell us the probability that the observed difference is "real" or due to the intervention being studied.

*Though A is more effective than B, there is a 5% chance the difference in reduction of LDL between the two groups is due to chance*

- This statement implies that Statin A is more effective, which cannot be concluded with a p-value of 0.052 if the significance level (alpha) was set at 0.05.

- The observed difference may well be due to chance, so claiming A is "more effective" on the basis of a p-value that does not meet the significance threshold is misleading. The p-value is also not the probability that the difference is due to chance.

*If 100 permutations of this experiment were conducted, 5 of them would show similar results to those described above*

- This is an incorrect interpretation. The p-value does not predict the outcome of repeated experiments in this manner.

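- The p-value is a **probability calculated assuming the null hypothesis is true**: the chance of a result at least this extreme if the two statins had identical effects. It does not state how often repeated experiments would show similar results when the true effect is unknown.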

*This is a statistically significant result*

- A p-value of 0.052 is generally considered **not statistically significant** if the conventional alpha level (significance level) is set at 0.05 (or 5%).

- For a result to be statistically significant at alpha = 0.05, the p-value must be **less than 0.05**.
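The definition in the correct answer can be illustrated with a small simulation. This is a sketch under stated assumptions, not the study's actual analysis: it assumes a two-sided test whose statistic follows a standard normal distribution under the null hypothesis, and the variable names are illustrative. The question gives no sample size or standard deviation, so the simulation works on the standardized test statistic rather than on LDL values.

```python
import random
from statistics import NormalDist

p_value = 0.052  # reported in the question

# Assumption: two-sided z-test. Recover the |z| that corresponds to p = 0.052.
z_observed = NormalDist().inv_cdf(1 - p_value / 2)

# Simulate many studies in which the null hypothesis is true
# (the two statins have identical effects), so z ~ N(0, 1).
rng = random.Random(0)
n_simulations = 200_000
as_extreme = sum(
    abs(rng.gauss(0.0, 1.0)) >= z_observed for _ in range(n_simulations)
)
fraction_as_extreme = as_extreme / n_simulations

print(f"|z| for p = 0.052: {z_observed:.3f}")
print(f"Share of null studies at least this extreme: {fraction_as_extreme:.3f}")
```

The simulated share comes out close to 0.052, which is the sense in which the p-value is the probability of a result at least this extreme when the medications truly have identical effects.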

Common misinterpretations of p-values US Medical PG Question 5: A randomized controlled double-blind study is conducted on the efficacy of 2 sulfonylureas. The study concluded that medication 1 was more efficacious in lowering fasting blood glucose than medication 2 (p ≤ 0.05; 95% CI: 14 [10-21]). Which of the following is true regarding a 95% confidence interval (CI)?

- A. If the same study were repeated multiple times, approximately 95% of the calculated confidence intervals would contain the true population parameter. (Correct Answer)

- B. The 95% confidence interval is the probability chosen by the researcher to be the threshold of statistical significance.

- C. When a 95% CI for the estimated difference between groups contains the value ‘0’, the results are significant.

- D. It represents the probability that chance would not produce the difference shown, 95% of the time.

- E. The study is adequately powered at the 95% confidence interval.

Common misinterpretations of p-values Explanation: ***If the same study were repeated multiple times, approximately 95% of the calculated confidence intervals would contain the true population parameter.***

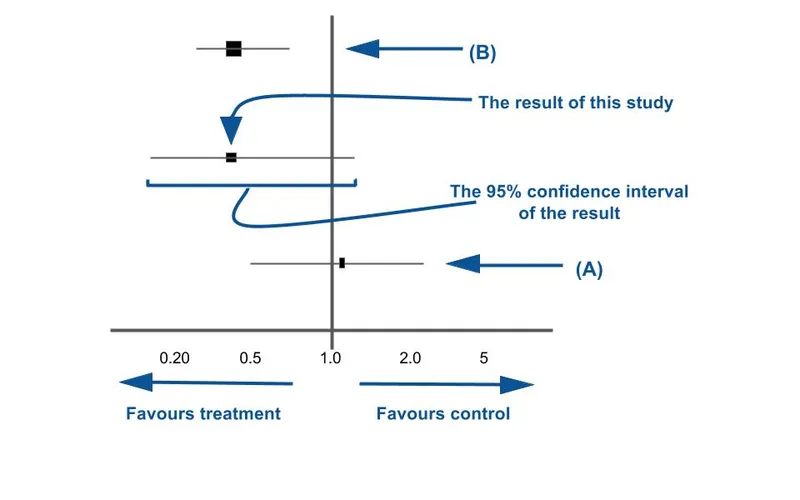

- This statement accurately defines the **frequentist interpretation** of a confidence interval (CI). It reflects the long-run behavior of the CI over hypothetical repetitions of the study.

- A 95% CI means that if you were to repeat the experiment many times, 95% of the CIs calculated from those experiments would capture the **true underlying population parameter**.

*The 95% confidence interval is the probability chosen by the researcher to be the threshold of statistical significance.*

- The **alpha level (α)**, typically set at 0.05 (or 5%), is the threshold for statistical significance (p ≤ 0.05), representing the probability of a Type I error.

- The 95% confidence level (1-α) is related to statistical significance, but it is not the *threshold* itself; rather, it indicates the **reliability** of the interval estimate.

*When a 95% CI for the estimated difference between groups contains the value ‘0’, the results are significant.*

- If a 95% CI for the difference between groups **contains 0**, it implies that there is **no statistically significant difference** between the groups at the 0.05 alpha level.

- A statistically significant difference (p ≤ 0.05) would be indicated if the 95% CI **does NOT contain 0**, suggesting that the intervention had a real effect.

*It represents the probability that chance would not produce the difference shown, 95% of the time.*

- This statement misinterprets the CI. The role of chance in producing the observed difference is addressed by the **p-value**, not by the CI.

- A CI provides a **range of plausible values** for the population parameter, not a probability about the role of chance in producing the observed difference.

*The study is adequately powered at the 95% confidence interval.*

- **Statistical power** is the probability of correctly rejecting a false null hypothesis, typically set at 80% or 90%. It is primarily determined by sample size, effect size, and alpha level.

- A 95% CI is a measure of the **precision** of an estimate, while power refers to the **ability of a study to detect an effect** if one exists. They are related but distinct concepts.
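The long-run interpretation in the correct answer can be illustrated by simulating repeated studies. This is a minimal sketch: the true mean, standard deviation, and sample size are hypothetical values chosen for illustration, and the standard deviation is treated as known so that the 1.96 multiplier applies exactly.

```python
import math
import random
from statistics import fmean

rng = random.Random(42)

# Hypothetical population and design (not taken from the study).
true_mean = 14.0
known_sd = 20.0
n_per_study = 30
n_studies = 5_000
z = 1.96

standard_error = known_sd / math.sqrt(n_per_study)
covered = 0
for _ in range(n_studies):
    sample = [rng.gauss(true_mean, known_sd) for _ in range(n_per_study)]
    sample_mean = fmean(sample)
    lower = sample_mean - z * standard_error
    upper = sample_mean + z * standard_error
    if lower <= true_mean <= upper:
        covered += 1  # this study's interval captured the true parameter

coverage = covered / n_studies
print(f"Share of intervals containing the true mean: {coverage:.3f}")
```

Across the simulated studies, roughly 95% of the intervals contain the true mean, while any single interval either contains it or does not.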

Common misinterpretations of p-values US Medical PG Question 6: A researcher is conducting a study to compare fracture risk in male patients above the age of 65 who received annual DEXA screening to peers who did not receive screening. He conducts a randomized controlled trial in 900 patients, with half of participants assigned to each experimental group. The researcher ultimately finds similar rates of fractures in the two groups. He then notices that he had forgotten to include 400 patients in his analysis. Including the additional participants in his analysis would most likely affect the study's results in which of the following ways?

- A. Wider confidence intervals of results

- B. Increased probability of committing a type II error

- C. Decreased significance level of results

- D. Increased external validity of results

- E. Increased probability of rejecting the null hypothesis when it is truly false (Correct Answer)

Common misinterpretations of p-values Explanation: ***Increased probability of rejecting the null hypothesis when it is truly false***

- Including more participants increases the **statistical power** of the study, making it more likely to detect a true effect if one exists.

- A higher sample size provides a more precise estimate of the population parameters, leading to a greater ability to **reject a false null hypothesis**.

*Wider confidence intervals of results*

- A larger sample size generally leads to **narrower confidence intervals**, as it reduces the standard error of the estimate.

- Narrower confidence intervals indicate **greater precision** in the estimation of the true population parameter.

*Increased probability of committing a type II error*

- A **Type II error** (false negative) occurs when a study fails to reject a false null hypothesis.

- Increasing the sample size typically **reduces the probability of a Type II error** because it increases statistical power.

*Decreased significance level of results*

- The **significance level (alpha)** is a pre-determined threshold set by the researcher before the study begins, typically 0.05.

- It is independent of sample size and represents the **acceptable probability of committing a Type I error** (false positive).

*Increased external validity of results*

- **External validity** refers to the generalizability of findings to other populations, settings, or times.

- While a larger sample size can enhance the representativeness of the study population, external validity is primarily determined by the **sampling method** and the study's design context, not just sample size alone.
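The effect of sample size on power can be sketched with a normal approximation. This is an illustration under simplifying assumptions: it treats the outcome as a standardized mean difference compared with a two-sided z-test (the trial itself compares fracture rates), and the effect size of 0.15 is hypothetical. The function name `approx_power` is also an illustrative choice.

```python
import math
from statistics import NormalDist

def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test
    for a standardized effect size with equal group sizes."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Expected value of the z statistic under the alternative.
    shift = effect_size * math.sqrt(n_per_group / 2)
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)

effect_size = 0.15                             # hypothetical true effect
power_900 = approx_power(effect_size, 450)     # 900 patients, half per group
power_1300 = approx_power(effect_size, 650)    # 1,300 patients, half per group

print(f"Power with 900 patients:  {power_900:.2f}")
print(f"Power with 1300 patients: {power_1300:.2f}")
```

With the significance level held fixed, the larger sample gives higher power, which is the same as a lower probability of a Type II error.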

Common misinterpretations of p-values US Medical PG Question 7: An investigator is measuring the blood calcium level in a sample of female cross country runners and a control group of sedentary females. If she would like to compare the means of the two groups, which statistical test should she use?

- A. Chi-square test

- B. Linear regression

- C. t-test (Correct Answer)

- D. ANOVA (Analysis of Variance)

- E. F-test

Common misinterpretations of p-values Explanation: ***t-test***

- A **t-test** is appropriate for comparing the means of two independent groups, such as the blood calcium levels between runners and sedentary females.

- It assesses whether the observed difference between the two sample means is statistically significant or occurred by chance.

*Chi-square test*

- The **chi-square test** is used to analyze categorical data to determine if there is a significant association between two variables.

- It is not suitable for comparing continuous variables like blood calcium levels.

*Linear regression*

- **Linear regression** is used to model the relationship between a dependent variable (outcome) and one or more independent variables (predictors).

- It aims to predict the value of a variable based on the value of another, rather than comparing means between groups.

*ANOVA (Analysis of Variance)*

- **ANOVA** is used to compare the means of **three or more independent groups**.

- Since there are only two groups being compared in this scenario, a t-test is more specific and appropriate.

*F-test*

- The **F-test** is primarily used to compare the variances of two populations or to assess the overall significance of a regression model.

- While it is the basis for ANOVA, it is not the direct test for comparing the means of two groups.

Common misinterpretations of p-values US Medical PG Question 8: A research group wants to assess the safety and toxicity profile of a new drug. A clinical trial is conducted with 20 volunteers to estimate the maximum tolerated dose and monitor the apparent toxicity of the drug. The study design is best described as which of the following phases of a clinical trial?

- A. Phase 0

- B. Phase III

- C. Phase V

- D. Phase II

- E. Phase I (Correct Answer)

Common misinterpretations of p-values Explanation: ***Phase I***

- **Phase I clinical trials** involve a small group of healthy volunteers (typically 20-100) to primarily assess **drug safety**, determine a safe dosage range, and identify side effects.

- The main goal is to establish the **maximum tolerated dose (MTD)** and evaluate the drug's pharmacokinetic and pharmacodynamic profiles.

*Phase 0*

- **Phase 0 trials** are exploratory studies conducted in a very small number of subjects (10-15) to gather preliminary data on a drug's **pharmacodynamics and pharmacokinetics** in humans.

- They involve microdoses, not intended to have therapeutic effects, and thus cannot determine toxicity or MTD.

*Phase III*

- **Phase III trials** are large-scale studies involving hundreds to thousands of patients to confirm the drug's **efficacy**, monitor side effects, compare it to standard treatments, and collect information that will allow the drug to be used safely.

- These trials are conducted after safety and initial efficacy have been established in earlier phases.

*Phase V*

- "Phase V" is not a standard, recognized phase in the traditional clinical trial classification (Phase 0, I, II, III, IV).

- This term might be used in some non-standard research contexts or for post-marketing studies that go beyond Phase IV surveillance, but it is not a formal phase for initial drug development.

*Phase II*

- **Phase II trials** involve several hundred patients with the condition the drug is intended to treat, focusing on **drug efficacy** and further evaluating safety.

- While safety is still monitored, the primary objective shifts to determining if the drug works for its intended purpose and at what dose.

Common misinterpretations of p-values US Medical PG Question 9: A medical research study is beginning to evaluate the positive predictive value of a novel blood test for non-Hodgkin’s lymphoma. The diagnostic arm contains 700 patients with NHL, of which 400 tested positive for the novel blood test. In the control arm, 700 age-matched control patients are enrolled and 0 are found positive for the novel test. What is the PPV of this test?

- A. 400 / (400 + 0) (Correct Answer)

- B. 700 / (700 + 300)

- C. 400 / (400 + 300)

- D. 700 / (700 + 0)

- E. 700 / (400 + 400)

Common misinterpretations of p-values Explanation: ***400 / (400 + 0) = 1.0 or 100%***

- The **positive predictive value (PPV)** is calculated as **True Positives / (True Positives + False Positives)**.

- In this scenario, **True Positives (TP)** are the 400 patients with NHL who tested positive, and **False Positives (FP)** are 0, as no control patients tested positive.

- This gives a PPV of 400/400 = **1.0 or 100%**, indicating that all patients who tested positive actually had the disease.

*700 / (700 + 300)*

- This calculation does not align with the formula for PPV based on the given data.

- The denominator `(700+300)` suggests an incorrect combination of various patient groups.

*400 / (400 + 300)*

- The denominator `(400+300)` incorrectly includes 300, which is the number of **False Negatives** (patients with NHL who tested negative), not False Positives.

- PPV focuses on the proportion of true positives among all positive tests, not all diseased individuals.

*700 / (700 + 0)*

- This calculation incorrectly uses the total number of patients with NHL (700) as the numerator, rather than the number of positive test results in that group.

- The numerator should be the **True Positives** (400), not the total number of diseased individuals.

*700 / (400 + 400)*

- This calculation uses incorrect values for both the numerator and denominator, not corresponding to the PPV formula.

- The numerator 700 represents the total number of patients with the disease, not those who tested positive, and the denominator incorrectly sums up values that don't represent the proper PPV calculation.
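The PPV formula from the explanation can be written as a short sketch. The function name `positive_predictive_value` is illustrative; the counts come from the question.

```python
def positive_predictive_value(true_positives, false_positives):
    """PPV = TP / (TP + FP)."""
    total_positive_tests = true_positives + false_positives
    if total_positive_tests == 0:
        raise ValueError("PPV is undefined when no one tests positive")
    return true_positives / total_positive_tests

# From the question: 400 of 700 NHL patients test positive (TP = 400),
# and 0 of 700 controls test positive (FP = 0).
ppv = positive_predictive_value(true_positives=400, false_positives=0)
print(f"PPV = {ppv:.0%}")  # PPV = 100%
```

The 300 NHL patients who tested negative are false negatives; they affect sensitivity (400/700), not PPV.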

Common misinterpretations of p-values US Medical PG Question 10: The height of American adults is expected to follow a normal distribution, with a typical male adult having an average height of 69 inches with a standard deviation of 0.1 inches. An investigator has been informed about a community in the American Midwest with a history of heavy air and water pollution in which a lower mean height has been reported. The investigator plans to sample 30 male residents to test the claim that heights in this town differ significantly from the national average, with heights assumed to be normally distributed. The significance level is set at 10% and the probability of a type 2 error is assumed to be 15%. Based on this information, which of the following is the power of the proposed study?

- A. 0.10

- B. 0.85 (Correct Answer)

- C. 0.90

- D. 0.15

- E. 0.05

Common misinterpretations of p-values Explanation: ***0.85***

- **Power** is defined as **1 - β**, where β is the **probability of a Type II error**.

- Given that the probability of a **Type II error (β)** is 15% or 0.15, the power of the study is 1 - 0.15 = **0.85**.

*0.10*

- This value represents the **significance level (α)**, which is the probability of committing a **Type I error** (rejecting a true null hypothesis).

- The significance level is distinct from the **power of the study**, which relates to Type II errors.

*0.90*

- This value would be the power if the **Type II error rate (β)** was 0.10 (1 - 0.10 = 0.90), but the question specifies a β of 0.15.

- It is also the complement of the significance level (1 - α), which is not the definition of power.

*0.15*

- This value is the **probability of a Type II error (β)**, not the power of the study.

- **Power** is the probability of correctly rejecting a false null hypothesis, which is 1 - β.

*0.05*

- While 0.05 is a common significance level (α), it is not given as the significance level in this question (which is 0.10).

- This value also does not represent the power of the study, which would be calculated using the **Type II error rate**.

