Sensitivity/Specificity

🎯 The Diagnostic Accuracy Arsenal: Sensitivity & Specificity Mastery

Every diagnostic test you order hinges on two fundamental questions: when disease is present, does the test catch it, and when disease is absent, does the test correctly rule it out? You'll master sensitivity and specificity as the core metrics that answer these questions, learn to navigate 2x2 tables with precision, and deploy SNOUT and SPIN strategies to make evidence-based decisions at the bedside. By integrating these tools across specialties, you'll transform from someone who orders tests into a clinician who strategically selects and interprets them to change patient outcomes.

Understanding sensitivity and specificity unlocks the logic behind every screening protocol, diagnostic algorithm, and clinical decision rule. These twin pillars of diagnostic accuracy determine whether a test excels at catching disease (sensitivity) or avoiding false alarms (specificity), fundamentally shaping how we approach patient care from emergency departments to population health screening.

📌 Remember: SNOUT & SPIN - Sensitive tests rule OUT disease when negative; SPecific tests rule IN disease when positive. High sensitivity (>95%) means negative results confidently exclude disease, while high specificity (>95%) means positive results reliably confirm disease presence.

The mathematical precision of these metrics transforms subjective clinical impressions into objective probability statements. When a highly sensitive test returns negative, the post-test probability of disease drops dramatically. Conversely, when a highly specific test returns positive, the likelihood of true disease increases substantially.

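⭐ Clinical Pearl: Tests with very high sensitivity (high-sensitivity D-dimer assays for pulmonary embolism are the usual example) excel as rule-out tools - negative results effectively exclude disease, particularly when pre-test probability is low. Tests with very high specificity excel as confirmatory tools - positive results strongly suggest disease presence. Troponin is often cited for myocardial infarction, although its specificity depends on the assay and cutoff because troponin also rises with other causes of myocardial injury.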

| Test Characteristic | Sensitivity Focus | Specificity Focus | Clinical Application | Threshold Value |
|---|---|---|---|---|
| Primary Strength | Catches disease | Avoids false positives | Screening vs confirmation | >95% optimal |
| When Negative | Rules OUT disease | Limited utility | High-confidence exclusion | >99% excellent |
| When Positive | Limited utility | Rules IN disease | High-confidence confirmation | >99% excellent |
| False Results | False negatives ↓ | False positives ↓ | Clinical consequences vary | <5% acceptable |
| Population Use | Low prevalence (rule-out, screening) | High prevalence (rule-in, confirmation) | Epidemiological context | Variable by setting |

🔬 The Mathematical Foundation: 2x2 Table Mastery

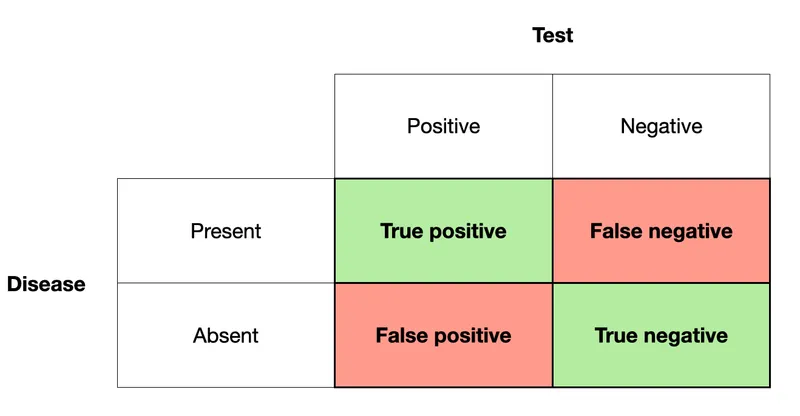

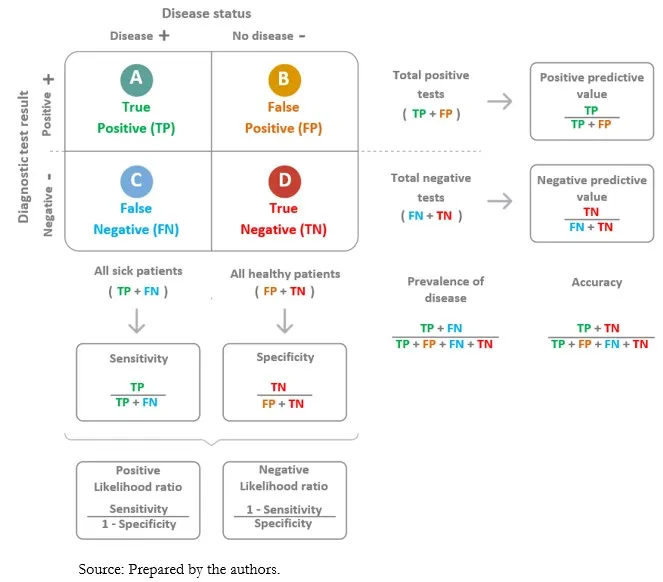

The 2x2 table represents the mathematical bedrock of diagnostic accuracy, transforming raw test results into clinically meaningful performance metrics. Every sensitivity and specificity calculation emerges from this elegant framework that categorizes all possible test outcomes against the gold standard of disease presence.

📌 Remember: TP-FN-FP-TN calculation sequence - True Positives divided by (True Positives + False Negatives) equals sensitivity. True Negatives divided by (True Negatives + False Positives) equals specificity. The denominators represent actual disease status, not test results.

Sensitivity Calculation Framework:

- Formula: TP / (TP + FN) = True Positive Rate

- Interpretation: Proportion of diseased patients correctly identified

- Clinical Meaning: Ability to detect disease when present

- Perfect Score: 100% means zero false negatives

Specificity Calculation Framework:

- Formula: TN / (TN + FP) = True Negative Rate

- Interpretation: Proportion of healthy patients correctly identified

- Clinical Meaning: Ability to exclude disease when absent

- Perfect Score: 100% means zero false positives

⭐ Clinical Pearl: In a study of 1,000 patients with 200 having disease, a test with 95% sensitivity will correctly identify 190 diseased patients but miss 10 cases (false negatives). The same test with 90% specificity will correctly identify 720 healthy patients but falsely alarm 80 cases (false positives).
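The arithmetic in this pearl can be checked with a short sketch. The function and variable names below are illustrative assumptions, not part of any standard library; the code simply rebuilds the 2x2 table from the cohort sizes and the stated sensitivity and specificity, then recomputes both metrics from the cells.

```python
def two_by_two(n_diseased, n_healthy, sensitivity, specificity):
    """Expected 2x2 cell counts, rounded to whole patients."""
    tp = round(n_diseased * sensitivity)   # diseased, test positive
    fn = n_diseased - tp                   # diseased, test negative
    tn = round(n_healthy * specificity)    # healthy, test negative
    fp = n_healthy - tn                    # healthy, test positive
    return {"TP": tp, "FN": fn, "FP": fp, "TN": tn}


def sensitivity_from_cells(cells):
    # Denominator is everyone who truly has the disease.
    return cells["TP"] / (cells["TP"] + cells["FN"])


def specificity_from_cells(cells):
    # Denominator is everyone who is truly disease-free.
    return cells["TN"] / (cells["TN"] + cells["FP"])


# 1,000 patients, 200 with disease, 95% sensitivity, 90% specificity
cells = two_by_two(n_diseased=200, n_healthy=800,
                   sensitivity=0.95, specificity=0.90)
print(cells)  # {'TP': 190, 'FN': 10, 'FP': 80, 'TN': 720}
print(sensitivity_from_cells(cells), specificity_from_cells(cells))
```

Both denominators come from true disease status (the columns of the table), which is why neither metric changes when prevalence changes.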

These metrics trade off against each other. For a test read against a cutoff, moving the cutoff to raise sensitivity typically lowers specificity, creating the classic diagnostic dilemma between catching all cases and avoiding false alarms.

💡 Master This: Every diagnostic test represents a mathematical compromise between sensitivity and specificity. Understanding this trade-off enables optimal test selection based on clinical context - prioritize sensitivity for serious diseases requiring early detection, prioritize specificity when false positives carry significant consequences.

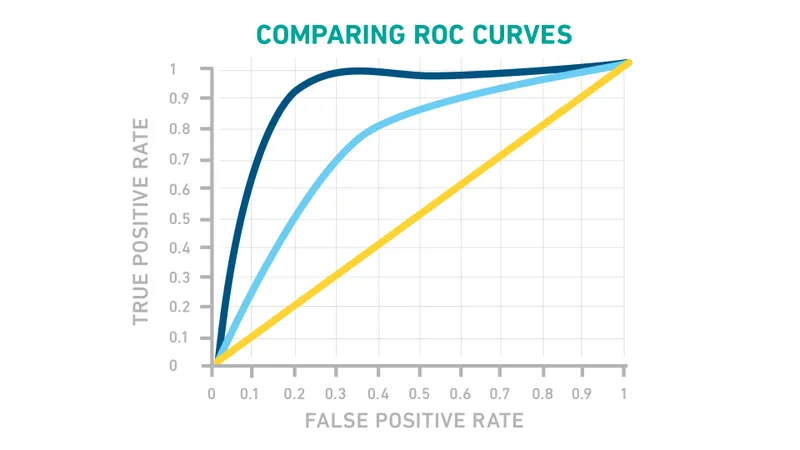

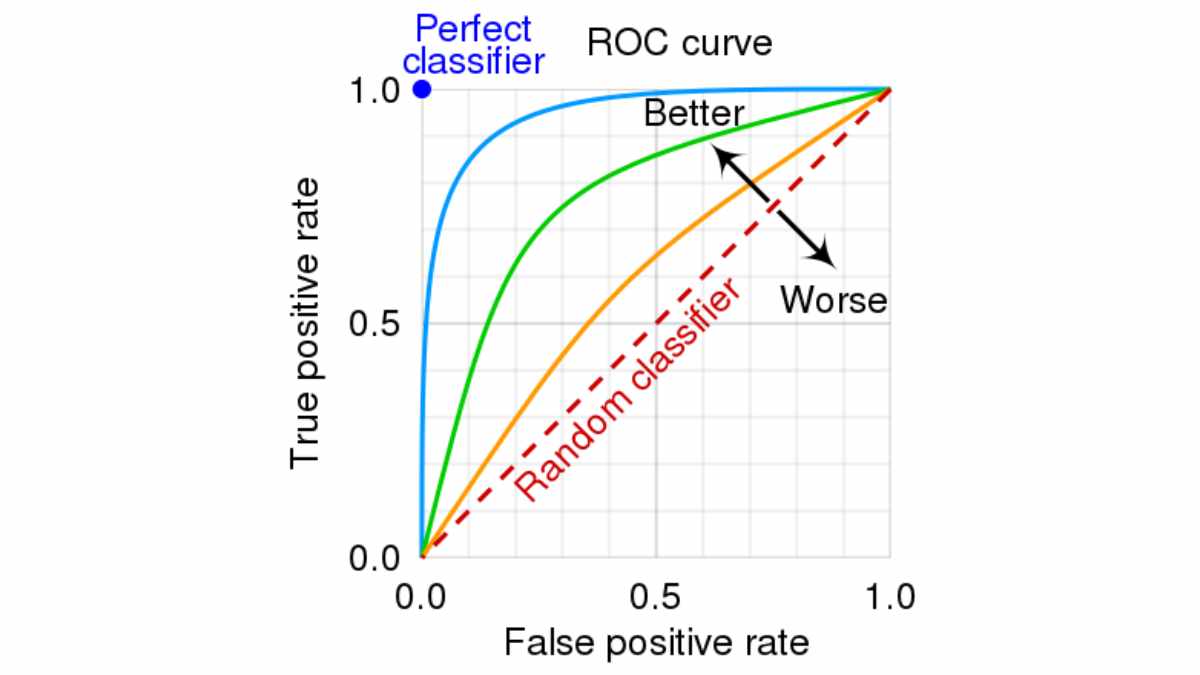

Connect these mathematical foundations through ROC curve analysis to understand how threshold manipulation affects diagnostic performance across the sensitivity-specificity spectrum.
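A minimal sketch of threshold manipulation follows. The biomarker values are invented purely for illustration, and the convention that a value at or above the threshold counts as a positive test is an assumption; each threshold yields one point on an ROC curve.

```python
def sens_spec_at_threshold(diseased_values, healthy_values, threshold):
    """Call the test positive when the measured value is >= threshold."""
    tp = sum(v >= threshold for v in diseased_values)
    fn = len(diseased_values) - tp
    fp = sum(v >= threshold for v in healthy_values)
    tn = len(healthy_values) - fp
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical biomarker measurements, invented for this example only.
diseased = [4.1, 5.0, 5.6, 6.2, 6.8, 7.5, 8.1, 9.0]
healthy = [1.2, 2.0, 2.7, 3.3, 3.9, 4.4, 5.2, 6.0]

curve = []
for threshold in (3.0, 4.0, 5.0, 6.0, 7.0):
    sens, spec = sens_spec_at_threshold(diseased, healthy, threshold)
    curve.append((threshold, sens, spec))
    print(f"threshold {threshold:.1f}: "
          f"sensitivity {sens:.3f}, specificity {spec:.3f}")
```

With these made-up values, raising the threshold never increases sensitivity and never decreases specificity, which is the trade-off the ROC curve plots.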

🎪 The Clinical Performance Theater: SNOUT & SPIN Strategies

The SNOUT and SPIN principles transform abstract statistical concepts into practical clinical decision-making tools. These mnemonics guide test selection based on whether the clinical priority involves ruling out serious disease or confirming suspected diagnoses with minimal false positives.

SNOUT Strategy - Sensitive Tests Rule OUT:

- Clinical Application: Screening for serious diseases

- Performance Threshold: Sensitivity >95% preferred

- Negative Result Power: High confidence disease exclusion

- Example Tests:
  - D-dimer for PE (>99% sensitive)
  - Mammography for breast cancer (>90% sensitive)
  - HIV ELISA screening (>99.5% sensitive)

SPIN Strategy - Specific Tests Rule IN:

- Clinical Application: Confirming suspected diagnoses

- Performance Threshold: Specificity >95% preferred

- Positive Result Power: High confidence disease confirmation

- Example Tests:
  - Troponin for MI (>95% specific)
  - PSA for prostate cancer (>90% specific)
  - Anti-CCP antibodies for RA (about 95% specific, more specific than rheumatoid factor)

📌 Remember: SNOUT for screening, SPIN for confirmation - a negative result on a high-Sensitivity test rules disease OUT; a positive result on a high-SPecificity (low false positive) test rules disease IN.

| Clinical Scenario | Strategy Choice | Test Characteristic | Performance Target | Clinical Outcome |
|---|---|---|---|---|
| Chest Pain ED | SNOUT approach | High sensitivity | >99% for MI | Negative rules out |
| Cancer Screening | SNOUT approach | High sensitivity | >90% detection | Negative reassures |
| Surgical Planning | SPIN approach | High specificity | >95% confirmation | Positive confirms |
| Expensive Treatment | SPIN approach | High specificity | >98% certainty | Positive justifies |
| Life-Threatening Disease | SNOUT approach | High sensitivity | >99% detection | Negative excludes |

The clinical context determines optimal strategy selection. High-stakes scenarios where missing disease proves catastrophic favor SNOUT approaches, while resource-intensive interventions or treatments with significant side effects favor SPIN approaches.

💡 Master This: Clinical decision-making transforms from intuition to evidence when you match test characteristics to clinical priorities. SNOUT tests excel in screening and emergency settings; SPIN tests excel in confirmation and specialty care.

Connect these strategic frameworks through predictive value analysis to understand how disease prevalence modifies test performance in real-world populations.
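As a hedged illustration of how prevalence changes what a result means, the sketch below applies Bayes' rule to a fixed test. The function name and the example figures (95% sensitivity, 90% specificity) are assumptions chosen to match the earlier 2x2 example, not data from a real assay.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test applied at a given prevalence."""
    tp = sensitivity * prevalence
    fn = (1 - sensitivity) * prevalence
    fp = (1 - specificity) * (1 - prevalence)
    tn = specificity * (1 - prevalence)
    ppv = tp / (tp + fp)  # P(disease | positive test)
    npv = tn / (tn + fn)  # P(no disease | negative test)
    return ppv, npv


# Same test, three different populations.
for prevalence in (0.01, 0.20, 0.50):
    ppv, npv = predictive_values(0.95, 0.90, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.3f}, NPV {npv:.3f}")
```

Sensitivity and specificity stay fixed throughout; only the predictive values move. At 1% prevalence most positives are false positives, while the negative predictive value is very high, which is why SNOUT works best when pre-test probability is low.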

⚖️ The Diagnostic Discrimination Matrix: Comparative Performance Analysis

Systematic comparison of diagnostic tests reveals performance patterns that guide evidence-based test selection. Understanding relative strengths and weaknesses across different testing modalities enables optimal diagnostic strategies tailored to specific clinical scenarios and patient populations.

High-Sensitivity Test Categories:

- Screening Tests: Designed to catch all cases
  - Mammography: 85-95% sensitive for breast cancer
  - Pap smear: 80-90% sensitive for cervical dysplasia
  - Colonoscopy: >95% sensitive for colorectal cancer
- Emergency Tests: Cannot miss critical diagnoses
  - D-dimer: >99% sensitive for pulmonary embolism
  - Troponin: >95% sensitive for myocardial infarction
  - CT head: >98% sensitive for intracranial hemorrhage

High-Specificity Test Categories:

- Confirmatory Tests: Minimize false positives
  - Biopsy: >99% specific for cancer diagnosis
  - Angiography: >98% specific for coronary disease
  - Genetic testing: >99% specific for hereditary conditions
- Expensive Tests: Justify resource utilization
  - PET scan: >90% specific for malignancy
  - Cardiac catheterization: >95% specific for CAD
  - MRI: >90% specific for neurological conditions

📌 Remember: SCREEN-CONFIRM-TREAT sequence - SCREEN with sensitive tests to catch disease, CONFIRM with specific tests to verify diagnosis, TREAT based on confirmed results. Each step requires different performance characteristics.

| Test Category | Sensitivity Range | Specificity Range | Clinical Role | Cost Consideration | False Result Impact |
|---|---|---|---|---|---|
| Screening Tests | 80-95% | 70-90% | Population health | Low cost essential | False negatives critical |
| Emergency Tests | >95% | 60-80% | Acute care | Cost secondary | Missing disease catastrophic |
| Confirmatory Tests | 70-90% | >95% | Diagnosis verification | High cost justified | False positives problematic |
| Monitoring Tests | 85-95% | 85-95% | Disease tracking | Moderate cost | Both errors significant |
| Prognostic Tests | 75-90% | 80-95% | Outcome prediction | Variable cost | Impacts treatment planning |

Test selection pathway (flowchart):

- Clinical Presentation (patient history, physical exam) → Disease Probability? (pre-test risk, clinical judgement)
- Low probability → High Sensitivity Test (rule-out power, few false negatives) → Negative Result?
  - Yes → Disease Unlikely (low suspicion, monitor patient)
  - No → Further Testing (check new labs, re-evaluate)
- Moderate probability → Balanced Test (mid-range odds, general screen) → Result Pattern? (mixed findings, review trends)
- High probability → High Specificity Test (rule-in power, few false positives) → Positive Result?
  - Yes → Disease Confirmed (final diagnosis, start therapy)
  - No → Disease Unlikely (outcome reached, exit pathway)

> ⭐ **Clinical Pearl**: The **"rule of 95"** guides test selection - tests with **>95% sensitivity** excel for ruling out disease, while tests with **>95% specificity** excel for ruling in disease. Tests with both metrics **>95%** represent ideal diagnostic tools but are rare and expensive.

**Performance Trade-off Analysis:**

* **Sensitivity ↑, Specificity ↓**: More false positives, fewer missed cases

* **Specificity ↑, Sensitivity ↓**: More missed cases, fewer false positives

* **Balanced Approach**: Moderate performance in both domains

* **Sequential Testing**: Combine high-sensitivity screening with high-specificity confirmation

> 💡 **Master This**: Diagnostic excellence requires matching test characteristics to clinical consequences. Prioritize sensitivity when missing disease proves catastrophic; prioritize specificity when false positives trigger harmful interventions or consume excessive resources.

Connect these performance comparisons through likelihood ratio analysis to understand how test results modify pre-test probability into post-test probability for clinical decision-making.
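A minimal sketch of the likelihood ratio calculation, assuming the standard definitions LR+ = sensitivity / (1 - specificity) and LR- = (1 - sensitivity) / specificity, and the odds form of Bayes' rule. The function names and the 20% pre-test probability are illustrative assumptions.

```python
def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-) for a dichotomous test."""
    lr_pos = sensitivity / (1 - specificity)
    lr_neg = (1 - sensitivity) / specificity
    return lr_pos, lr_neg


def post_test_probability(pre_test_probability, likelihood_ratio):
    """Convert probability to odds, apply the LR, convert back."""
    pre_odds = pre_test_probability / (1 - pre_test_probability)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


lr_pos, lr_neg = likelihood_ratios(sensitivity=0.95, specificity=0.90)
after_positive = post_test_probability(0.20, lr_pos)
after_negative = post_test_probability(0.20, lr_neg)
print(f"LR+ {lr_pos:.1f}, LR- {lr_neg:.3f}")
print(f"20% pre-test -> {after_positive:.1%} after a positive result")
print(f"20% pre-test -> {after_negative:.1%} after a negative result")
```

For this hypothetical test a positive result moves a 20% pre-test probability to roughly 70%, and a negative result moves it to under 2%. The same likelihood ratios applied to a different pre-test probability give different post-test probabilities.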

🎯 The Evidence-Based Treatment Algorithm: Diagnostic-Driven Decisions

Evidence-based treatment algorithms integrate diagnostic test performance with clinical outcomes data to create systematic decision frameworks. Understanding how sensitivity and specificity translate into treatment decisions enables optimal patient management while minimizing both missed diagnoses and unnecessary interventions.

Treatment Decision Framework:

- High-Sensitivity Negative: >95% confidence to withhold treatment
  - Negative D-dimer: Avoid anticoagulation for PE
  - Negative troponin: Discharge from chest pain protocol
  - Negative mammogram: Routine screening interval
- High-Specificity Positive: >95% confidence to initiate treatment
  - Positive biopsy: Proceed with cancer treatment
  - Positive angiogram: Schedule cardiac intervention
  - Positive culture: Start targeted antibiotic therapy

📌 Remember: TREAT-WITHHOLD-CONFIRM algorithm - TREAT on high-specificity positive results, WITHHOLD treatment on high-sensitivity negative results, CONFIRM with additional testing for intermediate results. Each decision requires specific performance thresholds.

| Clinical Decision | Test Requirement | Performance Threshold | Treatment Outcome | Error Consequence |
|---|---|---|---|---|
| Withhold Treatment | High sensitivity negative | >95% sensitive | Avoid unnecessary intervention | Missed disease if false negative |
| Initiate Treatment | High specificity positive | >95% specific | Targeted intervention | Unnecessary treatment if false positive |
| Emergency Treatment | High sensitivity positive | >90% sensitive | Rapid intervention | Overtreatment acceptable |
| Expensive Treatment | High specificity positive | >98% specific | Cost-effective care | Resource waste if false positive |
| Risky Intervention | High specificity positive | >99% specific | Justified risk | Harm if false positive |

- Treatment Threshold: Probability above which treatment benefits exceed risks
- Testing Threshold: Probability below which disease is so unlikely that neither testing nor treatment is warranted
- No-Test Zone: Very low or very high probabilities where no plausible result would change management; testing adds the most value at intermediate probabilities
- Cost-Effectiveness: Balance test costs against treatment outcomes

⭐ Clinical Pearl: The "treatment threshold" represents the disease probability where treatment benefits equal treatment risks. Tests must shift post-test probability across this threshold to influence clinical decisions. For myocardial infarction, this threshold approximates 15-20% probability.
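One common way to formalise this pearl is the classic threshold model, in which treatment is worthwhile when p × benefit exceeds (1 - p) × harm. The sketch below assumes that model; the function name and the utility values are hypothetical and are not taken from any clinical guideline.

```python
def treatment_threshold(net_benefit_if_diseased, net_harm_if_healthy):
    """Disease probability at which expected benefit equals expected harm.

    Treat when p * benefit > (1 - p) * harm, i.e. p > harm / (harm + benefit).
    """
    return net_harm_if_healthy / (net_harm_if_healthy + net_benefit_if_diseased)


# Hypothetical utilities: treating a diseased patient is worth 4 units,
# treating a disease-free patient costs 1 unit.
threshold = treatment_threshold(net_benefit_if_diseased=4,
                                net_harm_if_healthy=1)
print(f"treat when probability of disease exceeds {threshold:.0%}")


def result_changes_management(pre_test_probability, post_test_probability,
                              threshold):
    """A result matters only if it moves the patient across the threshold."""
    return (pre_test_probability > threshold) != (post_test_probability > threshold)
```

The larger the benefit relative to the harm, the lower the threshold, which is why treatments with large benefits and modest risks are started at low disease probabilities.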

Combined Testing Strategies:

- Parallel Testing: Multiple tests simultaneously for rapid diagnosis
  - Emergency department chest pain protocols
  - Cancer staging with multiple imaging modalities
  - Infectious disease panels for sepsis workup
- Serial Testing: Sequential tests based on prior results
  - Screening mammography followed by diagnostic imaging
  - Initial troponin followed by serial measurements
  - HIV screening followed by confirmatory testing
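The effect of combining two tests can be sketched as follows, under the simplifying assumption that the tests are conditionally independent given disease status (real tests often are not). The function names and the example test characteristics are hypothetical.

```python
def parallel_either_positive(sens_a, spec_a, sens_b, spec_b):
    """Both tests run; the combination is positive if EITHER is positive."""
    sens = 1 - (1 - sens_a) * (1 - sens_b)  # missed only if both miss
    spec = spec_a * spec_b                  # negative only if both negative
    return sens, spec


def serial_both_positive(sens_a, spec_a, sens_b, spec_b):
    """Test B follows a positive A; positive only if BOTH are positive."""
    sens = sens_a * sens_b                  # must be caught by both
    spec = 1 - (1 - spec_a) * (1 - spec_b)  # false alarm only if both err
    return sens, spec


# Hypothetical screening test A and confirmatory test B.
screen = (0.95, 0.80)
confirm = (0.85, 0.98)

par_sens, par_spec = parallel_either_positive(*screen, *confirm)
ser_sens, ser_spec = serial_both_positive(*screen, *confirm)
print(f"parallel: sensitivity {par_sens:.4f}, specificity {par_spec:.4f}")
print(f"serial:   sensitivity {ser_sens:.4f}, specificity {ser_spec:.4f}")
```

Under these assumptions parallel testing raises sensitivity at the cost of specificity, and serial testing does the reverse, which matches the screen-then-confirm pattern described above.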

💡 Master This: Clinical decision-making excellence requires understanding how diagnostic test performance translates into treatment decisions. Match test characteristics to decision thresholds, considering both the consequences of missed diagnoses and unnecessary treatments.

Connect these treatment algorithms through multi-system integration to understand how diagnostic strategies vary across different medical specialties and clinical contexts.

🌐 The Multi-System Integration Network: Specialty-Specific Applications

Specialty-Specific Performance Requirements:

- Emergency Medicine: Prioritizes sensitivity (>95%) for life-threatening conditions
  - Cannot miss MI, PE, stroke, sepsis
  - Accepts higher false positive rates
  - Time-sensitive decision making
  - Resource availability less constrained
- Primary Care: Balances sensitivity and specificity (85-95% each)
  - Population screening focus
  - Cost-effectiveness critical
  - Long-term patient relationships
  - Referral system available
- Oncology: Demands specificity (>98%) for treatment decisions
  - Toxic treatments require certainty
  - Staging accuracy critical
  - Prognosis depends on precision
  - False positives catastrophic

📌 Remember: EMERGENCY-PRIMARY-SPECIALTY hierarchy - EMERGENCY medicine prioritizes sensitivity to avoid missing critical diagnoses, PRIMARY care balances both metrics for population health, SPECIALTY care demands specificity for targeted interventions.

| Medical Specialty | Sensitivity Priority | Specificity Priority | Clinical Rationale | Performance Target |
|---|---|---|---|---|
| Emergency Medicine | Very High >95% | Moderate 70-85% | Cannot miss life-threatening disease | Miss nothing critical |
| Primary Care | High 85-95% | High 85-95% | Population screening efficiency | Balanced approach |
| Oncology | Moderate 80-90% | Very High >98% | Treatment toxicity requires certainty | Confirm before treating |
| Cardiology | High 90-95% | High 90-95% | Both MI and unnecessary procedures problematic | Balanced precision |
| Infectious Disease | High 90-95% | Moderate 75-85% | Antibiotic resistance vs untreated infection | Catch infections early |

- Handoff Communication: Test interpretation across specialties

- Resource Allocation: Different specialties competing for testing resources

- Quality Metrics: Balancing sensitivity and specificity across departments

- Cost Management: Specialty-specific testing strategies impact overall costs

⭐ Clinical Pearl: Cross-specialty communication requires understanding different performance priorities. Emergency physicians accept 10-15% false positive rates to achieve >99% sensitivity, while oncologists demand <2% false positive rates even if sensitivity drops to 85-90%.

Advanced Integration Concepts:

- Artificial Intelligence: Machine learning algorithms optimizing sensitivity/specificity trade-offs

- Precision Medicine: Genetic testing requiring >99% specificity for treatment selection

- Telemedicine: Remote diagnostics with modified performance requirements

- Point-of-Care Testing: Rapid results with acceptable performance compromises

Population Health Considerations:

- Screening Programs: Population-level sensitivity requirements

- Health Disparities: Performance variations across demographic groups

- Resource-Limited Settings: Modified thresholds based on available resources

- Public Health: Disease surveillance requiring high sensitivity

💡 Master This: Healthcare system excellence requires understanding how diagnostic performance requirements vary across specialties and integrating these differences into coherent patient care pathways. Optimize test selection based on specialty-specific priorities while maintaining system-wide quality and efficiency.

Connect these multi-system insights through rapid mastery frameworks to develop practical tools for immediate clinical application across diverse medical contexts.

🚀 The Clinical Mastery Toolkit: Rapid Reference Arsenal

Essential Performance Thresholds:

- Screening Excellence: Sensitivity >95%, Specificity >85%

- Confirmation Gold Standard: Sensitivity >85%, Specificity >98%

- Emergency Protocols: Sensitivity >99%, Specificity >70%

- Cost-Effective Balance: Both metrics >90%

- Population Health: Sensitivity >90%, Specificity >80%

📌 Remember: 95-85-99-90-90 thresholds - 95% sensitivity for screening, 85% minimum specificity, 99% sensitivity for emergencies, 90% for cost-effectiveness, 90% for population health. These numbers guide rapid test selection decisions.

Rapid Decision Matrix:

| Clinical Context | Sensitivity Target | Specificity Target | Primary Concern | Secondary Concern |
|---|---|---|---|---|
| Life-Threatening | >99% | >70% | Missing disease | Resource utilization |
| Screening Program | >95% | >85% | Population coverage | False positive burden |
| Expensive Treatment | >85% | >98% | Treatment justification | Missing candidates |
| Routine Diagnosis | >90% | >90% | Balanced accuracy | Overall efficiency |
| Research Study | >95% | >95% | Scientific validity | Reproducibility |

Clinical Commandments:

- SNOUT before SPIN - Screen with sensitivity, confirm with specificity

- Context drives choice - Emergency vs elective determines priority

- Numbers need context - Performance varies by population and prevalence

- Perfect tests don't exist - Every test involves trade-offs

- Sequential beats single - Combine tests strategically for optimal performance

💡 Master This: Diagnostic mastery requires instant recognition of performance thresholds and their clinical implications. Memorize the essential numbers, understand the trade-offs, and apply systematic frameworks to optimize patient care across all clinical contexts.

Advanced Mastery Framework:

- Pattern Recognition: Instant identification of optimal test characteristics

- Trade-off Analysis: Rapid assessment of sensitivity vs specificity priorities

- Context Integration: Specialty-specific performance requirements

- Resource Optimization: Cost-effective diagnostic strategies

- Quality Assurance: Systematic approach to diagnostic excellence

Connect these mastery tools through continuous clinical application to develop expert-level diagnostic decision-making capabilities that optimize patient outcomes while maximizing healthcare system efficiency.

Practice Questions: Sensitivity/Specificity

Test your understanding with these related questions

A scientist in Chicago is studying a new blood test to detect antibodies to EBV with increased sensitivity and specificity. So far, her best attempt at creating such a test reached 82% sensitivity and 88% specificity. She is hoping to increase each of these values by at least 2 percentage points. After several years of work, she believes that she has reached a sensitivity and specificity much greater than she had originally hoped for. She travels to China to begin testing her newest blood test. She finds 2,000 patients who are willing to participate in her study. Of the 2,000 patients, 1,200 are known to be infected with EBV. The scientist tests these 1,200 patients' blood and finds that only 120 of them test negative with her new test. Of the patients who are known to be EBV-free, only 20 test positive. Given these results, which of the following correlates with the test's specificity?
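A minimal worked sketch of the arithmetic behind this question, using only the counts stated in the vignette. The variable names are illustrative.

```python
total_patients = 2000
infected = 1200
not_infected = total_patients - infected         # 800 EBV-free patients

false_negatives = 120   # infected patients who tested negative
false_positives = 20    # EBV-free patients who tested positive

true_positives = infected - false_negatives      # 1080
true_negatives = not_infected - false_positives  # 780

sensitivity = true_positives / (true_positives + false_negatives)
specificity = true_negatives / (true_negatives + false_positives)

print(f"sensitivity = {true_positives}/{infected} = {sensitivity:.1%}")
print(f"specificity = {true_negatives}/{not_infected} = {specificity:.1%}")
```

Specificity uses only the 800 EBV-free patients as its denominator, so the 1,200 infected patients do not enter that calculation at all.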